Tech Things: OpenAI is an unaligned agent, Part 2

AGI — short for artificial general intelligence — is a concept that is constantly shifting meaning.

If you had asked someone 100 years ago for a good benchmark for AGI, they might have said 'well, surely you cannot play chess if you are not a reasoning, rational agent'. Except, you know, you can. And if you had asked someone 20 years ago for a good benchmark for AGI, they might have said 'well, surely you cannot pass the Turing test if you are not a reasoning, rational agent'. Except, you know, you can. And if you had asked someone 2 years ago for a good benchmark for AGI, they might have said 'well, surely you cannot create beautiful works of art if you are not a reasoning, rational agent'. Except, you know, you can.

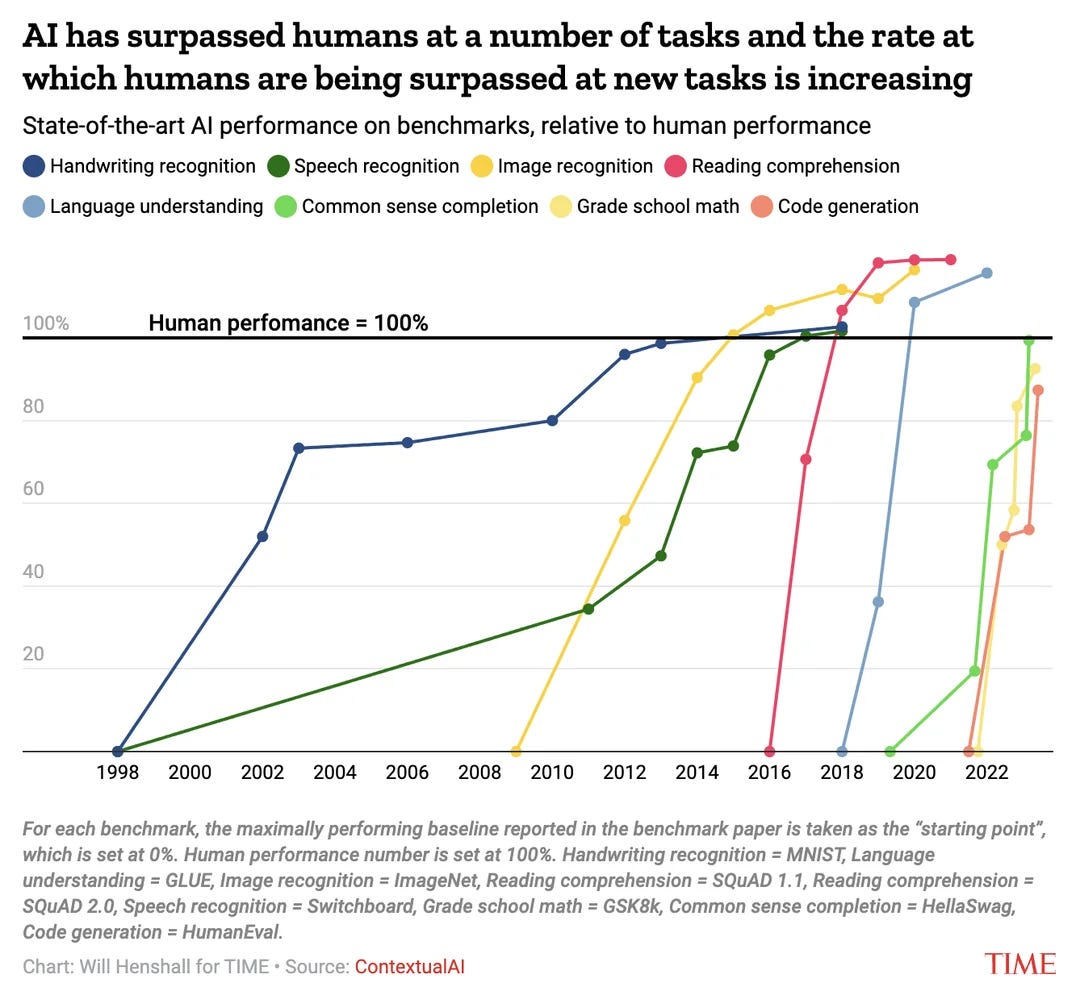

Take a look at these two charts. Like really internalize them.

In 2024, you have AI tools that aren't just better than humans at a wide range of basic tasks. They are starting to surpass experts in highly domain-specific tasks. And you can access all of these tools in your web browser! When you're sitting on the toilet in the morning you can pull up on your phone an automated expert in literally everything!

And yet, the goalposts shift. "We haven't yet reached AGI!" experts say. "AGI is when you have robots that can juggle on a unicycle while reciting the Iliad in a Cockney accent!" experts say.

For at least one company, though, the question of whether we have reached AGI is not just a semantics debate, but something of profound economic — even existential — importance. From Reuters:

> OpenAI is in discussions to remove a clause that shuts Microsoft (MSFT.O) out of the start-up's most advanced models when it achieves "artificial general intelligence", as it seeks to unlock future investments, the Financial Times reported on Friday.
>
> As per the current terms, when OpenAI creates AGI - defined as a "highly autonomous system that outperforms humans at most economically valuable work" - Microsoft's access to such a technology would be void.
>
> The ChatGPT-maker is exploring removing the condition from its corporate structure, enabling Microsoft to continue investing in and accessing all OpenAI technology after AGI is achieved, the FT reported, citing people familiar with the matter.
>
> …
>
> The clause was included to protect the technology from being misused for commercial purposes, giving its ownership to OpenAI's non-profit board.

Yes, well. The board decides when AGI has been reached, and that decision directly affects whether the company gets more funding. Maybe, just maybe, there's a conflict of interest here?

Except, wait, actually there didn't use to be a conflict of interest! There used to be an independent board that made decisions independent of financial interest! But that board got sacked, the new board wants to get rich(er), and OpenAI slides further away from its original goals of AI safety.

A few months ago I said:

> A lot of AI alignment researchers tend to be real downers at parties. Mostly, they say things like "we can't even get companies or bureaucracies composed of humans to not be evil, look at what Enron/Facebook/BP/the government do every day, and those are all composed of humans that we can talk to! What hope do we have against AI?" And, you know, they're right but still not very fun to be around.
>
> I don't personally take a side on whether OpenAI should or shouldn't be for profit or not. But I do think [OpenAI trying to become for-profit] is a fantastic and ironic example of exactly the kinds of things AI alignment researchers are so morose about. When OpenAI was created there were all these clever guard rails set up to make sure it would always 'be aligned' and 'do the right thing'. There's a controlling non profit board! There's a super alignment team! There's a ton of brand risk of people making fun of you and your company with snarky names like NopenAI or ClosedAI if you ever go back on your founding mission! Surely the organization will remain focused on safety over profit? And yet one by one, those guard rails have fallen.

One of the funnier things about being in tech in 2024 is watching what feels like the entirety of Silicon Valley give up on any pretense of ethics in exchange for ludicrous amounts of cash. To be fair, it is really, really ludicrous amounts of cash. But still! If that’s all it takes to create an unaligned human agent, we are, as the kids say, absolutely cooked.