Coding Agents can Manage Other Coding Agents

Test Driven Development is all you need

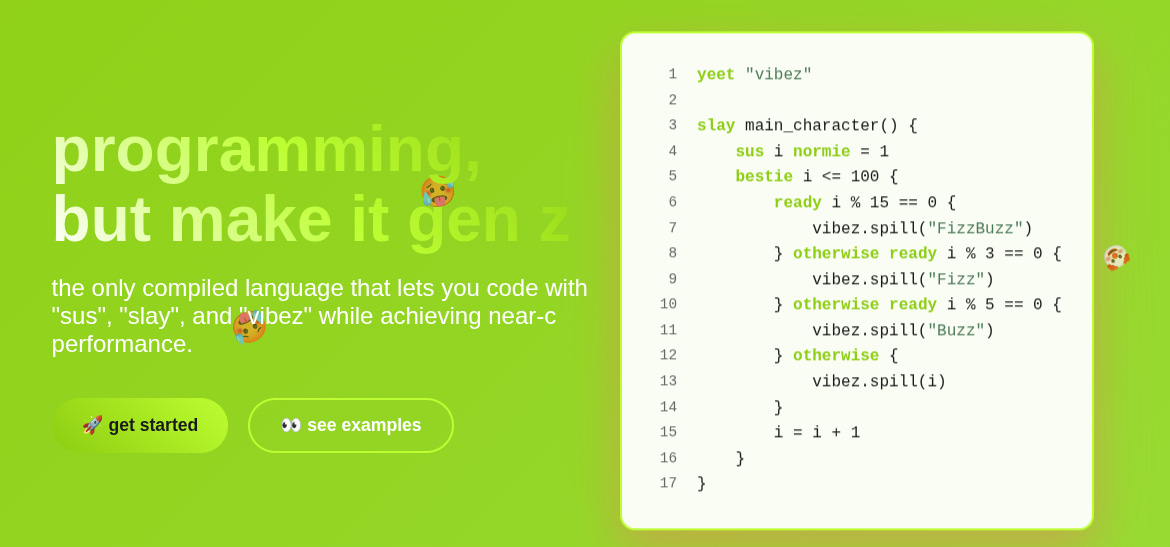

One of the best parts of my current job is that I get paid to think about all the different ways I can stretch coding agents. Obviously, coding agents can write code. But they can do plenty of other things, some mundane (you can have a coding agent watch your GitHub PRs until they turn green) and some extraordinary (you can have coding agents write entire programming languages).

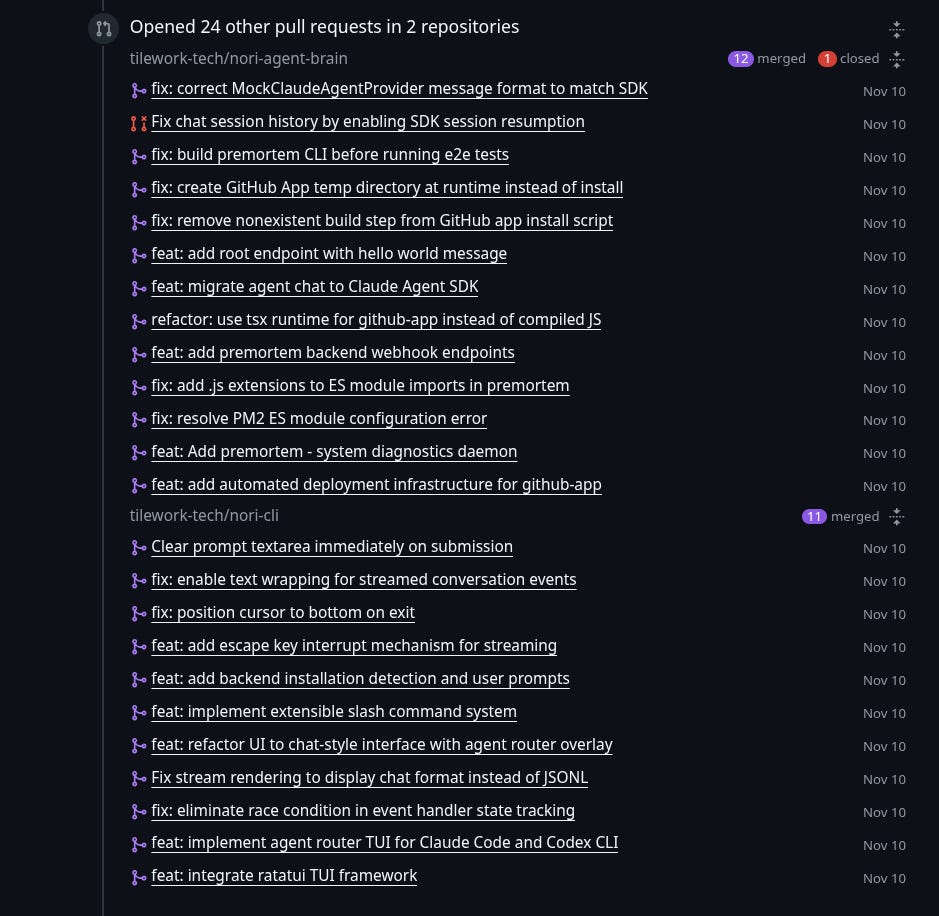

I’ve been embedding a lot of my expertise into my agents, which has led to some crazy velocity gains. My team of just me (and one other person soon, fingers crossed!) is averaging ten PRs shipped a day, and a few days ago I hit a record of 23 PRs landed. (If you’re curious, check out https://www.npmjs.com/package/nori-ai)


If you also want to be a Claude Code wizard, you can download all of my configs and embedded expertise from GitHub or npm. Setup is a single command: npx nori-ai@latest install. If you are part of a larger team, reach out directly at

My current workflow essentially has me acting as a senior engineer overseeing a team of juniors. I assign tasks to one of a pool of nori agents, and each one reports back with a PR. This works pretty well, but I have to keep a lot of state in my head for larger features. For example, if I want to scaffold out an entire new product, I need to assign one agent the backend, one agent the frontend, one agent the persistence, and so on. I mostly can’t expect a single agent to do everything because it will eventually run into context window limitations.

I’m lazy. Very very lazy. So I started thinking about what would happen if I just had the agents do everything, from planning to writing code to farming out to other agents. Can a nori-enhanced coding agent act as a coding agent manager?

TLDR: kinda!

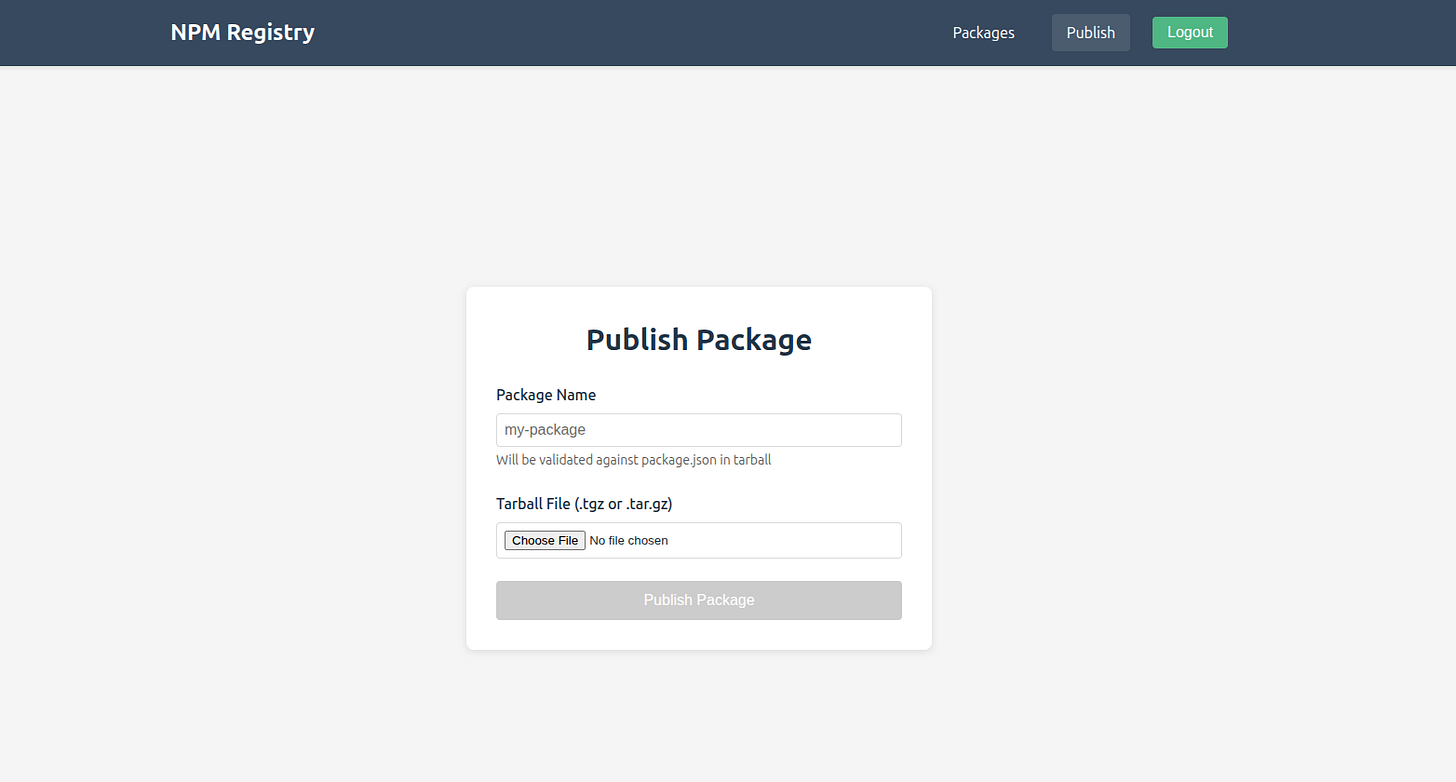

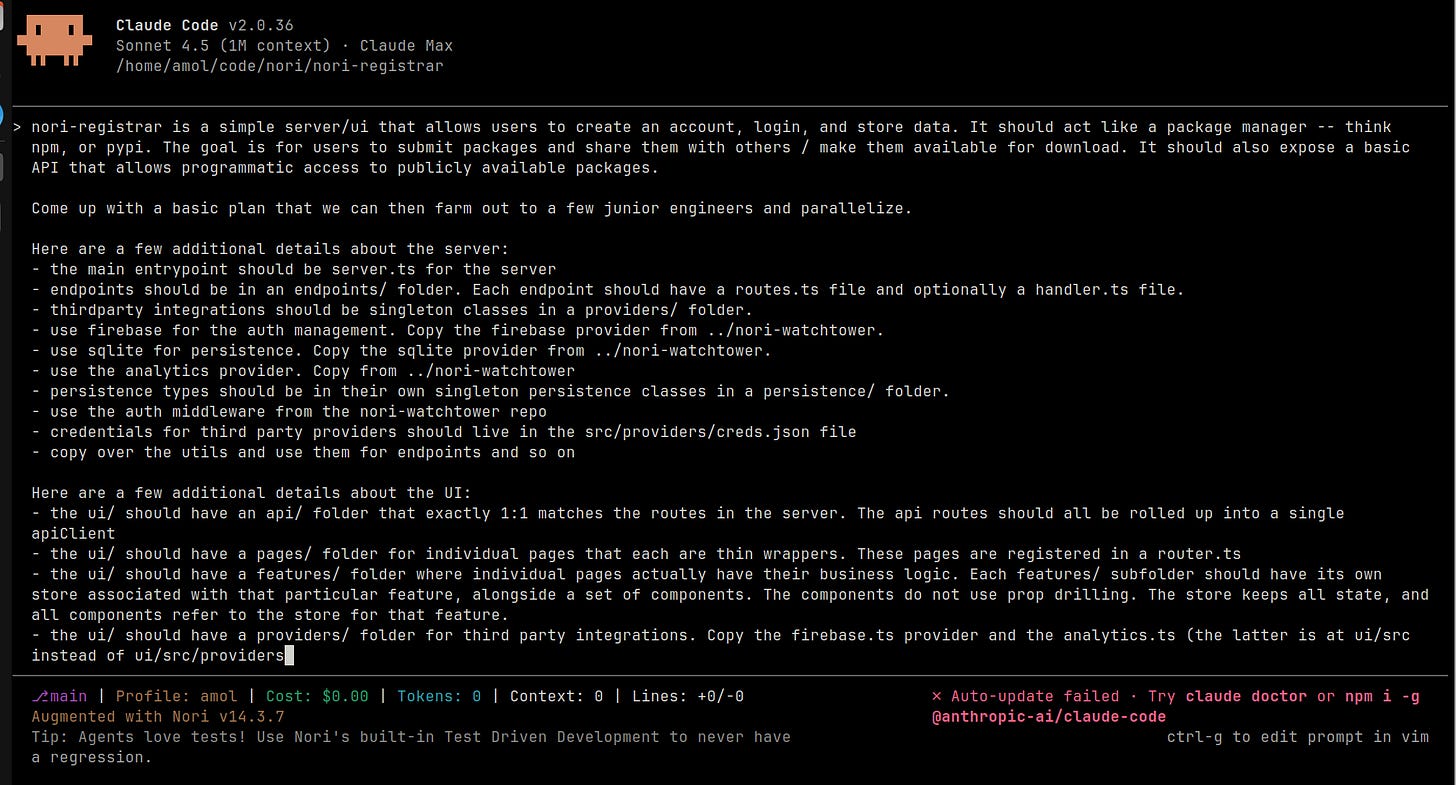

I asked nori to create a new profile registry site for nori profiles. This is a very basic CRUD app, the kind that the agents should have seen a lot of during training. But scaffolding a full service also has a lot of moving parts that all need to work together. I told nori the stack (TypeScript, Node, Express, Vue, SQLite, Firebase Auth) and then had it write out a plan that could explicitly be parallelized. Finally, after approving the plan, I explicitly told it to use the Task tool to spin up subagents. I also told the main runner that it was responsible for making sure everything ran at the end of the process.
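To make "a plan that could explicitly be parallelized" a bit more concrete, here is a minimal sketch of one way to represent such a plan. Everything in it is an assumption for illustration: the `PlanTask` shape, the `planWaves` helper, and the task names are hypothetical, and this is not nori's actual plan format or the Task tool's API.

```typescript
// Hypothetical plan format: each task lists the tasks it depends on.
// None of these names come from nori or Claude Code.
interface PlanTask {
  id: string;
  description: string;
  dependsOn: string[];
}

// Group tasks into "waves". Every task in a wave has all of its
// dependencies finished in earlier waves, so a manager agent could hand
// each wave to subagents in parallel.
function planWaves(tasks: PlanTask[]): string[][] {
  const remaining = new Map<string, PlanTask>();
  for (const t of tasks) remaining.set(t.id, t);
  const done = new Set<string>();
  const waves: string[][] = [];

  while (remaining.size > 0) {
    const ready = Array.from(remaining.values())
      .filter((t) => t.dependsOn.every((dep) => done.has(dep)))
      .map((t) => t.id);
    if (ready.length === 0) {
      throw new Error("Plan has a cycle or a missing dependency");
    }
    for (const id of ready) {
      remaining.delete(id);
      done.add(id);
    }
    waves.push(ready);
  }
  return waves;
}

// Made-up tasks loosely based on the stack described above.
const plan: PlanTask[] = [
  { id: "contract", description: "Shared API types", dependsOn: [] },
  { id: "schema", description: "SQLite schema and persistence", dependsOn: [] },
  { id: "api", description: "Express routes for profiles", dependsOn: ["contract", "schema"] },
  { id: "ui", description: "Vue frontend", dependsOn: ["contract"] },
  { id: "e2e", description: "Final checks by the main runner", dependsOn: ["api", "ui"] },
];

console.log(planWaves(plan));
```

With this made-up plan, the contract and schema tasks can run side by side, then the API and the UI, and the main runner's end-to-end check comes last.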

And then I let it run.

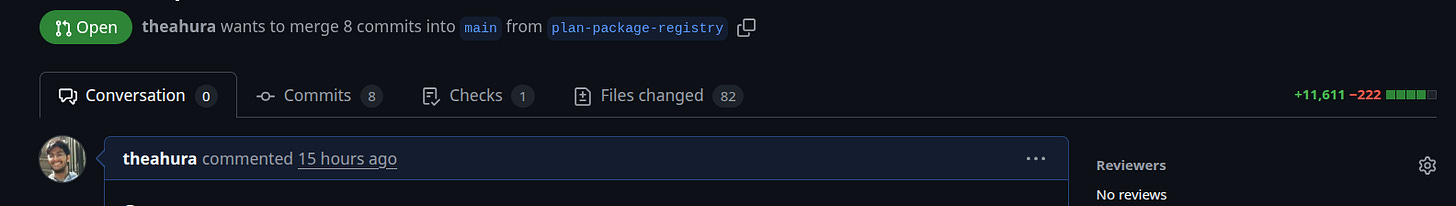

And boy did it run! I think the whole thing was chugging away for maybe an hour.[1] But you know, that’s fine. I got to do other things with that hour, like land 9 other PRs. By the time I returned I had a PR with a whopping 82 files and 11,000 lines changed.

🙃

OK, so reviewing this is not going to be fun, and that’s my job today. But there’s a larger question looming: does the app work?

And the answer is yeah, almost! The system didn’t set up a new Firebase project on its own, so I had to do that manually and provide credentials. But once that was set up, I was able to:

- Log in
- Upload profiles
- Search for profiles
- Download profiles

The app looks like shit. There’s not even a single ounce of aesthetic thought put into any of it. But scaffolding a full application in an hour while I can parallelize on other things is amazing.

I’m speculating here, but I think the only reason this worked is that nori is heavily configured to do TDD. That meant the parent task runner was rigorously testing behavior while the children kept themselves on track using unit tests. The final repo has 152 Vitest tests (including a few e2e Playwright tests) and ~1000 lines of documentation!

Taking a step back, coding agents are just LLMs. And all LLMs are basically just playing a game of telephone with themselves. Each output token, erroneous or not, is taken as ground truth by the next iteration. So at first, the model is mostly on track. But small errors compound, and eventually it loses the plot entirely. We call this ‘context rot’.
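A back-of-the-envelope way to see how small errors compound is the toy model below. It is an illustration, not a measurement: it assumes every step is independently correct with the same probability and that a single bad step derails the run, which real models don't strictly obey. The function name and the 99% figure are made up.

```typescript
// Toy model, not a measurement: assume each step is independently correct
// with probability perStepAccuracy, and one bad step derails the whole run.
function chanceStillOnTrack(perStepAccuracy: number, steps: number): number {
  return Math.pow(perStepAccuracy, steps);
}

// Print the chance of a clean run at 99% per-step accuracy.
for (const steps of [10, 100, 1000]) {
  console.log(steps, chanceStillOnTrack(0.99, steps).toFixed(4));
}
```

Under those assumptions, a step that is right 99% of the time leaves roughly a 90% chance of a clean run after 10 steps, about 37% after 100, and close to zero after 1000.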

Tests are so important because they formalize end-state goals at the very beginning of the process. You can think of a test as a feedback mechanism, a way to keep the model drawing inside the lines. If it ever goes too far, the test fails and the model readjusts. The result is that you can hand the agent tasks that span much longer contexts.
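Here is a minimal sketch of that feedback loop. It is not how nori or Claude Code is implemented: `CodingAgent`, `runWithTests`, and the fake agent are hypothetical stand-ins, and a real agent would call an LLM where the fake one just switches implementations.

```typescript
// Outcome of one run of the test suite.
interface TestReport {
  passed: boolean;
  failures: string[];
}

// Hypothetical agent interface: given the task and the failures from the
// last test run, produce a new attempt. A real agent would call an LLM here.
type CodingAgent<T> = (task: string, feedback: string[]) => T;

// The tests are fixed before any work starts. The loop ends only when they
// pass or the attempt budget runs out.
function runWithTests<T>(
  task: string,
  agent: CodingAgent<T>,
  runTests: (attempt: T) => TestReport,
  maxAttempts: number
): { attempt: T; attempts: number } {
  let feedback: string[] = [];
  for (let i = 1; i <= maxAttempts; i++) {
    const attempt = agent(task, feedback);
    const report = runTests(attempt);
    if (report.passed) return { attempt, attempts: i };
    feedback = report.failures; // failing tests steer the next attempt
  }
  throw new Error("Tests still failing after " + maxAttempts + " attempts");
}

// Toy stand-ins so the sketch runs: the "work" is a slugify function.
type Slugify = (name: string) => string;

const slugifyTests = (fn: Slugify): TestReport => {
  const failures: string[] = [];
  if (fn("My Profile") !== "my-profile") failures.push("should lowercase and hyphenate");
  if (fn("  padded ") !== "padded") failures.push("should trim whitespace");
  return { passed: failures.length === 0, failures };
};

// Fake agent: the first attempt forgets to trim, and it fixes that once told.
const fakeAgent: CodingAgent<Slugify> = (_task, feedback) =>
  feedback.indexOf("should trim whitespace") !== -1
    ? (name) => name.trim().toLowerCase().replace(/\s+/g, "-")
    : (name) => name.toLowerCase().replace(/\s+/g, "-");

const result = runWithTests("write slugify", fakeAgent, slugifyTests, 3);
console.log(result.attempts);
```

The point of the design is that the goal lives in `slugifyTests`, outside the agent. The agent can drift on its first attempt, but the failing test pulls it back on the second.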

I’m still playing with the coding-agent-as-manager role and figuring out how to fully embed it into nori. I think it will likely be a SKILL, but it could straight up be a full nori profile on its own. If you’re interested in getting the latest, follow along at the nori-profiles repo (https://github.com/tilework-tech/nori-profiles). More to come.

[1] I’m glad Claude Max exists, because I probably ripped through a few hundred bucks in token cost.
