Enshittification is our fault

Somewhat contra Paul Krugman

First, a story told through examples.

People say that Google Search has been getting worse. The most recent complaint is that the AI box at the top is often wrong or misleading. This is on the back of a long history of complaints about how Google prevents actual click-through to the original sources that power Google search.

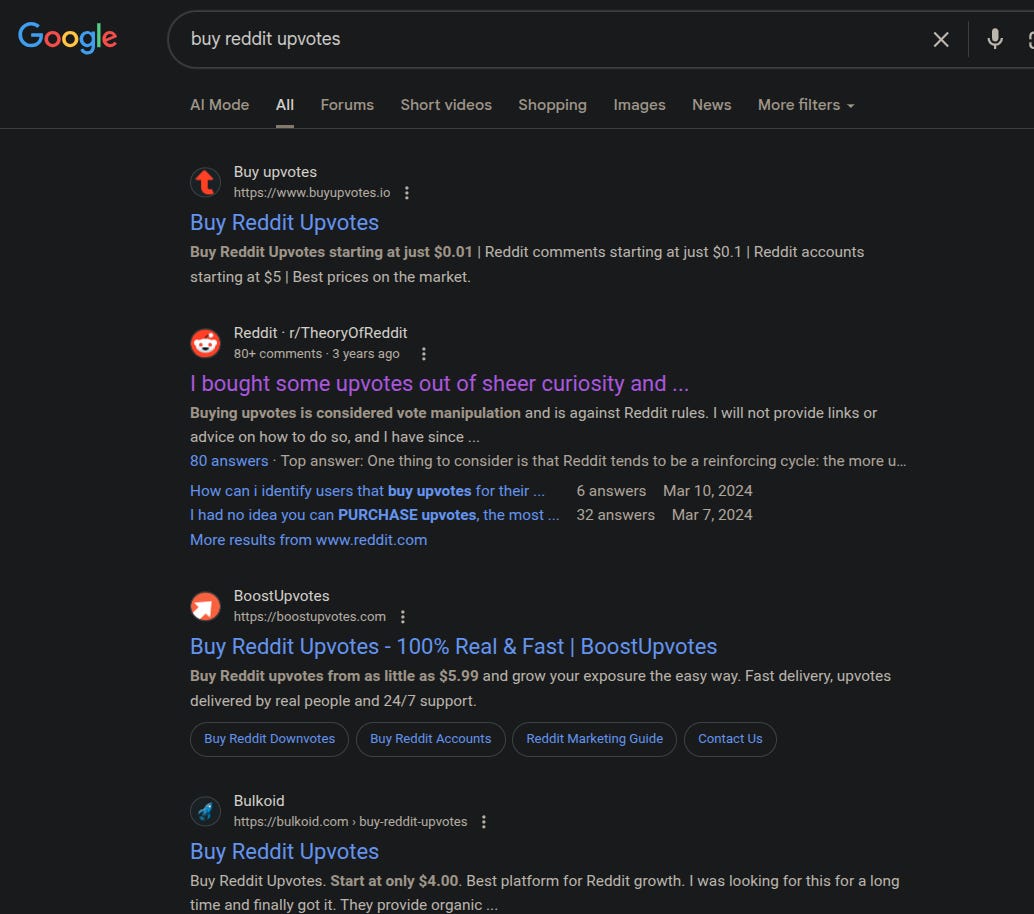

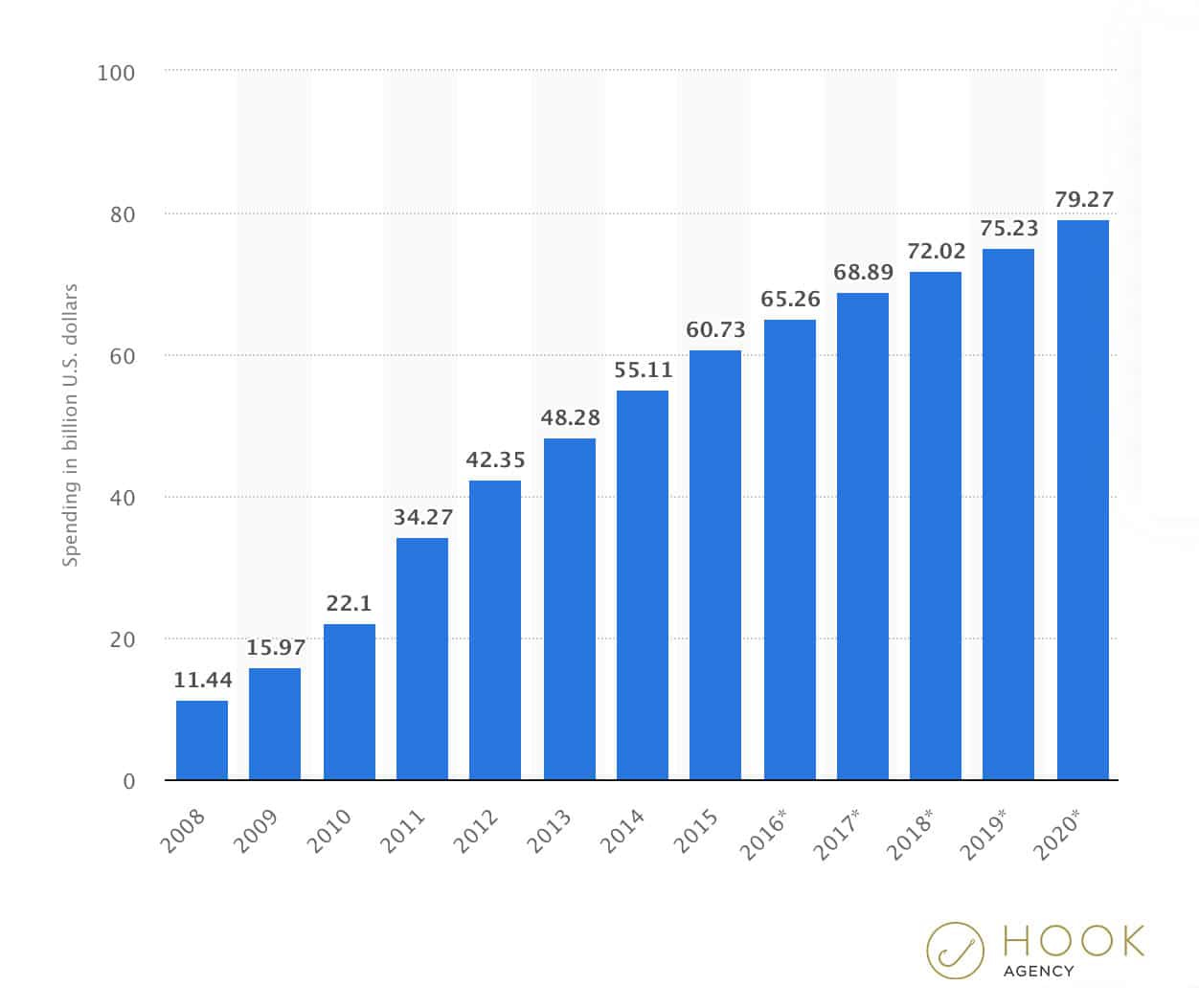

Also, SEO (search engine optimization) has gone from niche concept to full industry. There are, literally, billions of dollars spent trying to adversarially game search engine algorithms. This is seen as a necessary and good thing. Of course, if anyone were ever to actually perfect SEO, it would immediately break the search engine. From the search engine's perspective, there is virtually no difference between SEO and spam.

So Google Search did get worse. Some part of that is because Google wanted to capture more money. But some part of that is because we — the people who make websites and want those websites to do well — made it worse.

People say that Airbnb has been getting worse. Gone are the days of meeting cool hosts in unique places for steep discounts. Everything is cookie cutter. And everything costs way too much. Of particular note is the increase in cleaning fees, which has drawn the ire of many a traveler. "What is the point of a cleaning fee if I still have to do the cleaning?"

Also, here's the subreddit for Airbnb hosts. It is filled with horror stories about guests who have outright destroyed the homes they stayed in.

Also, hosts can really suck. Many individual hosts are ~~bloodsucking leeches~~ bad at their jobs. And there are dozens of companies that have rolled up rental properties for the explicit purpose of turning them into Airbnbs, so that they can squeeze travelers while providing shitty accommodations.

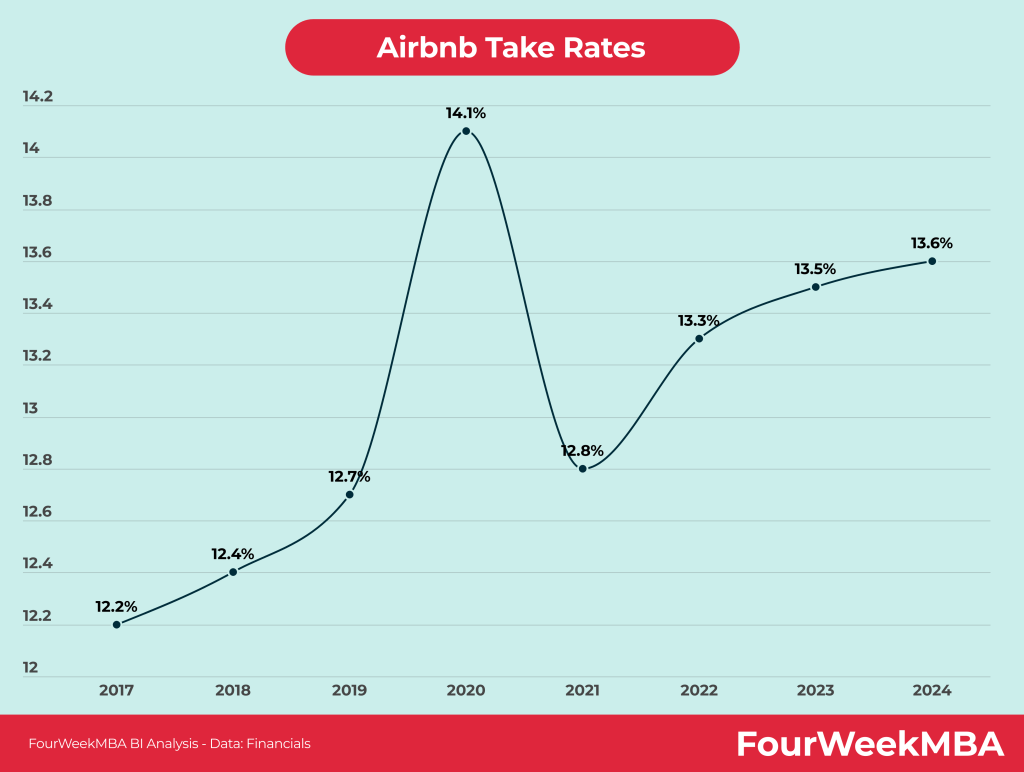

Meanwhile, Airbnb's take rate has fluctuated between 11% and 14%. It's been in that range since 2015, and is actually lower today than its peak in 2020.

So Airbnb did get worse. Some part of that is because Airbnb wanted to capture more value. But some part of that is because we — the people who host and want to make as much for as little, and the people who travel and want to get as much for as little — made it worse.

People say that Facebook has been getting worse. Actually, people say that anything with a feed has been getting worse. It's all algorithmic slop and karma farming and rehashes of the same tired memes. There's no interesting content. You only ever see what the algorithm wants you to see, and it's a shitty algorithm.

Also, people karma farm, hard. Everyone is individually trying to create the most viral content. Bot farms break decentralized voting systems, while people outright buy likes/upvotes/popular accounts behind the scenes.

Also, Facebook has run A/B tests where they disable the algorithmic feeds, replacing those feeds with chronological posts and comments from direct friends. Engagement plummeted, and every visible metric showed that people were having a worse time.[^1]

> Turning off the News Feed ranking algorithm, the researcher found, led to a worse experience almost across the board. People spent more time scrolling through the News Feed searching for interesting stuff, and saw more advertisements as they went (hence the revenue spike). They hid 50% more posts, indicating they weren’t thrilled with what they were seeing. They saw more Groups content, because Groups is one of the few places on Facebook that remains vibrant. And they saw double the amount of posts from public pages they don’t follow, often because friends commented on those pages. “We reduce the distribution of these posts massively as they seem to be a constant quality compliant,” the researcher said of the public pages.

So Facebook (and Twitter, and Reddit, and…) did get worse. Some part of that is because these platforms wanted to capture more value. But some part of that is because we — the people who want that next dopamine hit and the people who optimize for Internet fame — made it worse.

Wikipedia gets manipulated by motivated actors. The modern news landscape is atrocious, but no one wants to actually step up and pay for news. Amazon is inundated with counterfeits that people buy because they are cheap. AI slop is being pumped out constantly by people looking to make a quick buck. Enshittification, yes. But whose fault?

Ok ok, one more. Slightly out of pattern.

The headline of this article is purposely a bit clickbaity. I could have made the headline something like "Internet platforms get worse in part due to adversarial incentives inherent to living in a capitalist society" or whatever, but then you wouldn't have clicked on the damn article! And I want you to click on the article, because I want you to subscribe! So I'm making the Internet slightly worse by being slightly selfish. Multiplied by a billion, and throw in increasingly powerful AI and increasingly unscrupulous actors, and you get the state of modern communication on the web.

Recently, Paul Krugman wrote about the general theory of enshittification, and how it has driven animus towards Silicon Valley:

> Suppose you run a business whose product, whatever it may be, is subject to network effects: the more people using it, the more attractive it is to other current or potential users. Social media platforms like Facebook or TikTok are the currently obvious examples, but the logic works for services like Uber or physical goods like electric vehicles too.
>
> What’s the profit-maximizing strategy for your business? The answer seems obvious: offer really good value to your customers at first, to build up the size of your network, then enshittify — soak the customer base you’ve built. The enshittification could take the form of charging higher prices, but it could also involve reducing quality, forcing people to watch ads, etc. Or it could involve all of the above.
>
> The Biden administration made some efforts to regulate tech. In part this reflected a perception that the big players had turned their focus from innovation to exploiting their locked in customer bases — a process memorably described by Cory Doctorow as enshittification. In part it represented growing awareness of the psychological and social harm often associated with internet use.
>
> And like Wall Street tycoons a decade or so earlier, tech bros responded with rage. Was this rage performative, a warning to politicians who might be tempted to support regulation? Or was it genuine outrage at the idea that anyone might criticize their brilliance and benevolence? Yes.
>
> Like Wall Street earlier, tech had genuine reason to fear increased regulation, because — probably as a result of enshittification — it no longer had the broad public support it once enjoyed.

First, some important context. I've worked in spam and abuse at Google, and I have friends who have worked in trust and safety at Meta and Twitter. Anyone who has worked in a spam and abuse role knows that these platforms are under fire and adversarial attack basically all the time. Which makes me think Krugman’s take is way too simplistic: “Enshittification is their fault. They are doing it on purpose because <moral failing>.” Krugman is definitely pointing to a real phenomenon, and his writing is insightful as usual. But like many of those critical of the modern tech landscape, Krugman only points the cannons of his criticism outward.

Point me to some form of enshittification, and I'll find someone abusing the system in a way that makes it worse for everyone. Honestly, most of the time I can't even blame them. Sure, I'm not personally trashing my Airbnbs and then suing the host, or whatever. But I share my Netflix password, or use other people's promo codes, or use student discounts when I'm no longer a student. We all have incentives. Some of those incentives are things like "juice network effects and moats" or "squeeze money out of a locked-in consumer base." But some of those incentives are things like "take advantage of social trust," or "defect in a prisoner's dilemma." Complaints about the decline of Wikipedia underscore the point: Wikipedia is a modern miracle, but it is also constantly under attack from politically motivated forces that are well outside Wikipedia’s control. Is this ‘enshittification’?

None of this is new. Some people call these "coordination problems"; some call it Moloch. It's all been with us as long as we've been around. Enshittification is just the latest round of the euphemism treadmill. But I think with this latest turn, most of the discourse has centered on how the evil mustache-twirling corpos are rounding up their monopolies and taking advantage of folks who are stuck because said corpos are the only game left in town. And corporate greed is certainly part of the problem. It's just not the only thing, or even necessarily the majority of the issue.[^2] I haven't ever seen anyone call out SEO experts for essentially making a career out of adversarial pollution of the commons. To me, SEO is roughly equivalent to dumping toxic waste in a lake.

Ok, so what? "The problem isn't this, it's that." Who cares? The platforms are still getting worse, and the Internet is still in decline.

If we want to prevent enshittification – as Krugman presumably does – we need to be thinking about the problem in totality. All existing solutions to enshittification focus on wrangling the big corporations. But that's insufficient; those people are already pretty motivated to create decent products! The key insight from the above framing is that you also need to change the behavior of users as a class, by working backwards from their pre-existing incentive structures.

Take the SEO spam example. One thing that immediately stands out to me is that SEO is a race to the bottom, a classic prisoner's dilemma. If no one uses SEO, the search engines get better and the best content floats to the top. But in that environment, the first person to start using SEO immediately captures a ton of personal value at the cost of making search worse. And that of course encourages all competitors to start using SEO too! Which essentially leaves everyone worse off than before: now everyone is spending a ton of money on SEO and getting zero relative value from it, and the search engines are worse too.
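To make the prisoner's dilemma concrete, here is a minimal Python sketch of two competing websites deciding whether to invest in SEO. The payoff numbers are invented purely for illustration (they are not measurements of anything); only their ordering matters: using SEO while your competitor abstains beats mutual restraint, which beats mutual SEO, which beats being the lone holdout. `PAYOFF`, `best_response`, and `nash_equilibria` are hypothetical helpers, not part of any library.

```python
from itertools import product

STRATEGIES = ("no_seo", "seo")

# Hypothetical, made-up payoffs: the value one website gets,
# indexed by (my strategy, competitor's strategy).
PAYOFF = {
    ("no_seo", "no_seo"): 10,  # search works well; good content wins on merit
    ("seo", "no_seo"): 14,     # the first mover captures traffic, even after paying for SEO
    ("no_seo", "seo"): 4,      # the honest site gets buried
    ("seo", "seo"): 6,         # everyone pays for SEO, nobody gains ground, search degrades
}


def best_response(theirs: str) -> str:
    """Return the strategy that maximizes my payoff, given the competitor's choice."""
    return max(STRATEGIES, key=lambda mine: PAYOFF[(mine, theirs)])


def nash_equilibria() -> list[tuple[str, str]]:
    """Strategy pairs where each site's choice is a best response to the other's."""
    return [
        (a, b)
        for a, b in product(STRATEGIES, repeat=2)
        if best_response(b) == a and best_response(a) == b
    ]


for theirs in STRATEGIES:
    print(f"competitor plays {theirs!r} -> my best response is {best_response(theirs)!r}")
print("equilibria:", nash_equilibria())
```

Whatever the competitor does, SEO is the better reply, so both sites land on `("seo", "seo")` and each gets 6 instead of the 10 they would both have gotten by abstaining.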

Google can't exactly step in and stop this. There's no way for Google to unilaterally tell everyone to put down their guns. But this sort of coordination problem is exactly where the government could get involved. In a capitalist society, pricing in negative externalities and solving coordination problems is the government's job! No one wants to be the first person to put down their weapons; in theory, with government regulation, everyone can save their SEO budget and we'll all be better off.
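A toy two-site game can illustrate what "pricing in the externality" would do to that standoff. This is a minimal sketch assuming a hypothetical regulator that charges a flat `fine` to any site that uses SEO; the base payoffs are invented numbers with the usual prisoner's dilemma ordering, and nothing here is a claim about how such a rule could actually be defined or enforced. `BASE_PAYOFF`, `payoff`, `best_response`, and `nash_equilibria` are illustrative names only.

```python
from itertools import product

STRATEGIES = ("no_seo", "seo")

# Hypothetical, made-up payoffs with prisoner's dilemma ordering:
# value to one website, indexed by (my strategy, competitor's strategy).
BASE_PAYOFF = {
    ("no_seo", "no_seo"): 10,
    ("seo", "no_seo"): 14,
    ("no_seo", "seo"): 4,
    ("seo", "seo"): 6,
}


def payoff(mine: str, theirs: str, fine: int) -> int:
    """My payoff after a hypothetical regulator charges `fine` to anyone using SEO."""
    return BASE_PAYOFF[(mine, theirs)] - (fine if mine == "seo" else 0)


def best_response(theirs: str, fine: int) -> str:
    """Strategy maximizing my payoff, given the competitor's choice and the fine."""
    return max(STRATEGIES, key=lambda mine: payoff(mine, theirs, fine))


def nash_equilibria(fine: int) -> list[tuple[str, str]]:
    """Strategy pairs where each site's choice is a best response to the other's."""
    return [
        (a, b)
        for a, b in product(STRATEGIES, repeat=2)
        if best_response(b, fine) == a and best_response(a, fine) == b
    ]


for fine in (0, 5):
    print(f"fine={fine}: equilibria={nash_equilibria(fine)}")
# fine=0: equilibria=[('seo', 'seo')]
# fine=5: equilibria=[('no_seo', 'no_seo')]
```

With no fine, mutual SEO is the only stable outcome. With a large enough fine, mutual restraint becomes the equilibrium, and each site ends up with 10 instead of 6 without anyone having to trust that the other will disarm first.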

Or consider the virality-engagement personalized-feed problem. I don't think anyone likes the attention economy. You get more clickbait, worse content, your brain gets totally hijacked, you end up feeling mad and depressed, and your whole day is wasted from doomscrolling. I would not be surprised if future generations saw algorithmic personalization as equivalent to how we see cigarettes today. And I also would not be surprised if future generations regulated such feeds accordingly. Existing software platforms have the same sort of prisoner's dilemma as in the SEO case. If you are a consumer platform that refuses to do algorithmic personalization because you do not want to create an addiction in your users, you lose people to the (unethical?) companies that have no such scruples. So you have a race to the bottom, where all the big platforms are trying to develop the most addictive patterns possible. Again, the government could easily step in here. Make the big social media platforms put down their weapons through regulation. Suddenly we all get our brains back, and the social media platforms no longer have to spend massive amounts of money training ML models to optimally addict their users.

The libertarian in me tends to be a bit wary of government intervention, so let me be clear about what I am not saying. I don't think the government should be allowed to say things like 'any SEO is banned' (what would that even mean? Is just having a webpage at all considered SEO?). I don't think the government should be allowed to say that all personalization is banned either (curating a set of streams, news sources, and people to follow is a form of personalization, and seems obviously beneficial). Both of these proposals give politicians too much ability to censor. Instead, I'd want more subtle solutions. For example, the government could mandate that algorithmic personalization be opt-in rather than on by default, with a big warning sign about the addiction risks over the toggle.

The problem with these kinds of policy proposals is that they are, for the most part, very specific. Fixing the warped attention economy incentives on Meta isn't going to fix the bad customer and host behavior on Airbnb. There's no silver bullet for Moloch; smart people have to do a lot of hard work to defeat each of its many arms. But I think this is more directionally accurate than focusing ire exclusively on the big tech companies.

Krugman has yet to publish his proposed policies, so it's quite possible that we end up aligned. Still, given the tenor of the discourse, I suspect his proposals won't properly consider the role we all play in the enshittification of these platforms.

[^1]: Caveat: I think it's totally consistent for people to say that the actual problem is the engagement in the first place. I think algorithmic personalization is very addictive, so I'm sympathetic to this. I'm more directly targeting people who like to engage, but complain that the content isn't good or whatever.

[^2]: I’m reminded of the progressive-left opinion that groceries cost more these days because of price gouging by grocery stores. I don’t think this has really been borne out empirically, but people like to hold onto this fantasy anyway. Now, the executive teams at Wegmans or whatever are probably more highly paid than ever before. But that has more to do with the decreasing power of labor than with taking advantage of the customer.

The most effective government intervention would be to ban paid advertising / paid promotion. Paid advertising, unlike SEO, can be banned because it can be defined clearly. It would fix the perverse social media incentives to maximize engagement, and it would solve the price floor issue of “I can’t compete with someone who does it for free with ads.”

We've mostly lost the idea of a sellout, someone looked down upon for using their talents to make more money in a way that harms the world instead of producing useful or good things.

I think there's a compounding effect on society when people view a job that produces no value or negative value (SEO specialists, rent seekers, etc.) as on par with one that does produce value.

More people work for institutions that aren't making life better. People feel that institutions are taking advantage of them. People then feel justified taking advantage of systems. Systems get worse.