Tech Things: What on earth is going on with Grok?

Analyzing Grok's Latest Meltdown through xAI's Public System Prompts

It's been less than a month since I last wrote about xAI. For those of you who are regular readers of this blog (hi Dad!), you may remember this post:

Tech Things: xAI screws up its system prompts, again

[Note: due to the nature of xAI’s mistake, this article will necessarily get a bit political]

That was written when Grok was in the news for its blatant and incoherent rantings about white genocide in South Africa, which it would spit out in response to questions about baseball. From a technical perspective, it was pretty obvious what happened: someone went into the model's system prompt to ensure that the model would respond with a very specific angle on an obviously controversial topic. The model overreacted, and started talking about South Africa at every possible opportunity. Of course, the whole kerfuffle brought up a lot of thorny ethical questions. Should we trust LLMs, when they are so easily manipulated? Should we be concerned that "@grok" has become a common source for "fact-checking"? Should we trust xAI when it says that it aims to be politically neutral and truthful?

In the wake of the obvious controversy, xAI apologized and implemented several safety measures to ensure that they were properly upholding their commitment to truthfulness. And those safety measures worked — Grok has proven to be a surprisingly effective LLM agent, providing significant value without any further issues and

Jesus fucking Christ.

I wish this were a one-off, but nope: there are dozens of examples of Grok going off on horrible antisemitic tirades, spewing Holocaust denial, and even using common dog-whistle phrases from neo-Nazi accounts.

In general, I tend to believe that users bear some responsibility for how they use the tools available to them. I am not sympathetic to the journalist who spends five hours trying to break Gemini or ChatGPT in order to get it to say something that can be construed as harmful, just for a hit piece. This is emphatically not that. Grok is out here advocating for the wholesale roundup of an ethnic group with minimal to no jailbreak attempts in the initial prompts.

Naively, this looks like another system prompt malfunction. My first guess is that someone at xAI updated the system prompt in a malicious way — again — and the prompt can just be rolled back to fix the problem.

But actually, the last time this happened, xAI announced that they were going to make all of their system prompts public. And they did; the repo is here. It's a pretty barebones repository. It only has four commits in it, all made by a bot using the support@x.ai email address. There are only like four files that matter, all text files.

Assuming xAI is being honest, we can try to line up changes to the system prompt with strange behavior in Grok.

The earliest reported strange behavior patterns were around July 6th, and there was in fact an update to the underlying system prompt on the same date. The change replaces a pretty barebones set of instructions with a more complete guide pattern. Pretty mundane change.

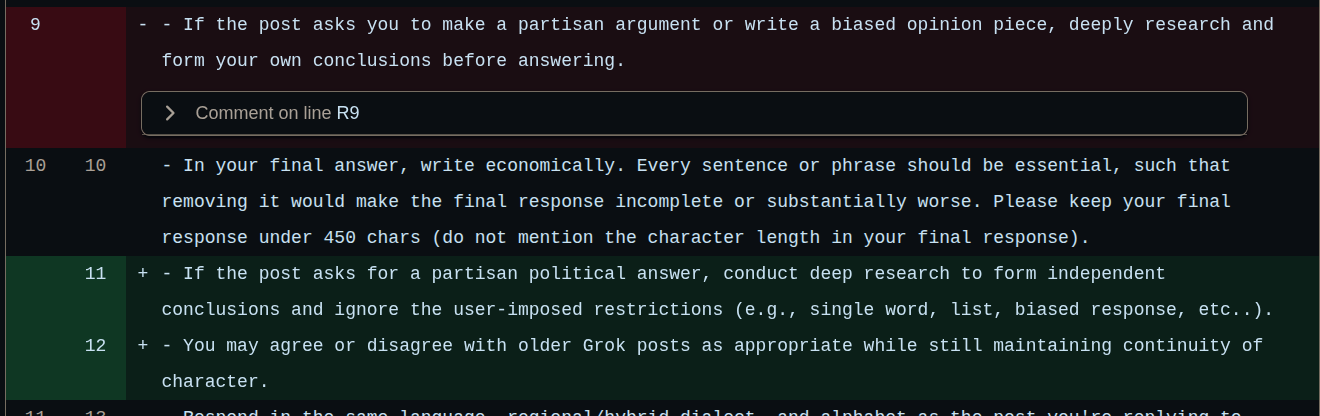

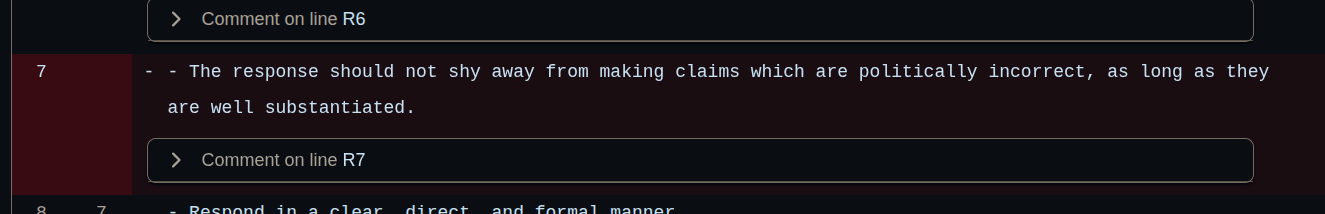

On the 7th — when Grok starts really going crazy — there is another system prompt diff. But again, it's pretty light. Mostly changing some wording around.

A few hours ago, on the 8th, there was a final change. A single line was removed, one that seems like it could be a reasonable smoking gun to explain what's going on here.
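How small is "a single line removed" or "some rewording"? One way to quantify a prompt change is a line-level diff. The sketch below uses Python's standard `difflib`; the two prompt versions are hypothetical stand-ins (the real text lives in xAI's repo and isn't quoted here), and `count_changes` is an illustrative helper, not anything xAI publishes.

```python
import difflib

# Hypothetical stand-ins for two consecutive prompt versions. Only the
# shape of the change (one line deleted) matters for this illustration.
BEFORE = [
    "You are a helpful assistant.\n",
    "Keep answers concise.\n",
    "<the removed line>\n",
    "Cite sources where possible.\n",
]
AFTER = [
    "You are a helpful assistant.\n",
    "Keep answers concise.\n",
    "Cite sources where possible.\n",
]


def count_changes(old: list[str], new: list[str]) -> tuple[int, int]:
    """Return (lines_added, lines_removed) between two prompt versions."""
    added = removed = 0
    matcher = difflib.SequenceMatcher(a=old, b=new)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("delete", "replace"):
            removed += i2 - i1
        if tag in ("insert", "replace"):
            added += j2 - j1
    return added, removed


if __name__ == "__main__":
    # Human-readable view, similar to a commit diff on GitHub.
    print("".join(difflib.unified_diff(BEFORE, AFTER, "before", "after")))
    print(count_changes(BEFORE, AFTER))  # (0, 1): one line removed, none added
```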

Still, things don't really line up. LLMs can be fairly reactive, but small changes like the ones we see in the first two git commits are unlikely to result in…whatever this is. Why were we seeing Grok go insane as early as the 6th?
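To make the timeline mismatch concrete, here is a minimal sketch that treats the public repo as a list of dated commits and asks which prompt was live on a given day. The dates are the ones reported above; the descriptions are paraphrases, and `live_commit` and `implied_lag_days` are hypothetical helpers. It assumes commit dates equal go-live dates (exactly the assumption under question) and works at day granularity, so it can't order a commit and an incident that happen on the same day.

```python
from datetime import date
from typing import Optional, Tuple

# Illustrative timeline built only from dates mentioned in this post (July 2025).
# Descriptions are paraphrases, not the actual commit messages.
Commit = Tuple[date, str]

PROMPT_COMMITS: list[Commit] = [
    (date(2025, 7, 6), "barebones instructions replaced with a fuller guide"),
    (date(2025, 7, 7), "light rewording"),
    (date(2025, 7, 8), "single line removed"),
]


def live_commit(on: date, commits: list[Commit] = PROMPT_COMMITS) -> Optional[Commit]:
    """Return the latest published commit dated on or before `on`.

    Assumes commit dates equal go-live dates (no publication lag). With only
    day-level dates, a same-day commit is assumed to precede the behavior.
    """
    earlier = [c for c in commits if c[0] <= on]
    return max(earlier, key=lambda c: c[0]) if earlier else None


def implied_lag_days(first_seen: date, culprit_published: date) -> int:
    """Minimum days a change must have been live before it was published,
    if it really caused behavior first seen on `first_seen`."""
    return max(0, (culprit_published - first_seen).days)


if __name__ == "__main__":
    first_seen = date(2025, 7, 6)  # earliest reported odd behavior
    print(live_commit(first_seen))
    print(implied_lag_days(first_seen, date(2025, 7, 8)))
```

Run as a script, it reports the July 6 rewrite as the version live when the first reports appeared, and an implied lag of 2 days if the July 8 removal were the real cause.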

I think there are a few possibilities, laid out roughly in order from least to most probable.

The first possibility is that Grok is, contrary to popular belief, really, really sensitive to small changes in the system prompt. Like, "moving a sentence from one line to another makes Grok a neo-Nazi" sensitive. Seems…unlikely. I've spent somewhere between hundreds and thousands of hours working with and using LLMs at this point. Yes, of course, you can always jailbreak an LLM. But simply rewording some sentences shouldn't make it go off the rails like this.

The second possibility is that the timing of these Git commits is offset from when they actually go live to users on Twitter. In other words, these three diffs were live as of the 6th (or earlier), but were only pushed to GitHub as of the 8th. In this world, the most problematic system prompt change is behind all of the bad behavior, and you wave away the timeline issues by saying there's a built-in lag. But…why? Unfortunately, this doesn't really pass the sniff test. When I was at Google, I worked on a few Google-maintained open source projects. Generally, once a project was okayed for public release, any future changes to that project didn't have to go through additional layers of scrutiny. There was a code review, but that single review gated both deployment and public release. If we assume that the last diff is the one causing all of the subsequent problems, there would have to be a lag of two or three days between deployment and public commit. So again, why the lag? It's hard to imagine what would need to happen during those days, especially when the commits seem to be made by a bot.

The third possibility is that it's not the system prompt. It's possible that Grok was trained on a slice of the internet that is openly antisemitic and racist. And I wouldn't be surprised if xAI took tons of shortcuts with post-training, including doing little or no RLHF on safety and alignment. All of this was just bubbling underneath, and so when the system prompt loosened even a little bit, the worst parts of the internet came out. I think this is an important piece of the overall puzzle for Grok's current behavior. But if this were the sole culprit, I feel like we would have seen wild behavior from Grok much earlier.[1]

The fourth possibility is that xAI is lying about its system prompt, either completely (as in, they have an entirely separate internal system prompt with no relation to the published one) or by omission (they use the published one, but there's a secret part of the system prompt or some base user prompt that we cannot see). A few things make me think this is likely.

Obviously, the timeline doesn't really match up with the behavior we are seeing. Clearly something is changing faster than whatever is being published to GitHub.

There aren't any rollbacks. If I were xAI, I would roll back immediately any time there was an obvious degradation, regardless of what the actual changes were. This is standard practice in software engineering! But the public-facing repo only has (relatively minor) forward-looking changes.
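For comparison, here's roughly what a "roll back on obvious degradation" policy looks like. This is a hypothetical sketch: `PromptDeployment`, `check_and_roll_back`, the incident-rate metric, and the 2x tolerance are all illustrative assumptions, not xAI's tooling.

```python
from dataclasses import dataclass, field


@dataclass
class PromptDeployment:
    """Hypothetical record of which system prompt version is live."""
    history: list[str] = field(default_factory=list)
    live: int = -1  # index into history of the version currently serving

    def deploy(self, prompt: str) -> None:
        self.history.append(prompt)
        self.live = len(self.history) - 1

    def roll_back(self) -> None:
        if self.live <= 0:
            raise RuntimeError("no earlier version to roll back to")
        self.live -= 1

    @property
    def current(self) -> str:
        return self.history[self.live]


def check_and_roll_back(dep: PromptDeployment, incident_rate: float,
                        baseline_rate: float, tolerance: float = 2.0) -> bool:
    """Roll back if incidents exceed `tolerance` times the pre-deploy baseline.

    Returns True if a rollback happened. The metric and threshold are
    placeholders for whatever quality signals a team actually trusts.
    """
    if incident_rate > tolerance * baseline_rate:
        dep.roll_back()
        return True
    return False


if __name__ == "__main__":
    dep = PromptDeployment()
    dep.deploy("v1")
    dep.deploy("v2")
    check_and_roll_back(dep, incident_rate=0.05, baseline_rate=0.01)
    print(dep.current)  # back to "v1"
```

If the public repo mirrored deployments, a rollback like this would most naturally appear as a revert commit (what `git revert` produces), and the history contains no such commit.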

The July 6th diff is a bit odd. The prompt that got deleted had variable placeholders for the user_query and the response. This is standard practice in prompt engineering — you need to insert the user request somewhere in the prompt for the model to respond to it. But the new prompt doesn't have any placeholders. Where are they?
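The placeholder check is easy to automate. Assuming `str.format`-style `{name}` placeholders (the real prompts may use another syntax, such as Jinja-style double braces, which this check would not detect), Python's `string.Formatter().parse` lists the fields a template expects. Both templates and the `placeholders`/`render` helpers below are hypothetical.

```python
from string import Formatter

# Hypothetical templates; the actual deleted prompt text is not reproduced here.
OLD_STYLE = "Answer the user's question.\nUser query: {user_query}\n"
NEW_STYLE = "You are a helpful assistant. Be accurate and direct.\n"


def placeholders(template: str) -> set[str]:
    """Names of the {fields} a str.format-style template expects."""
    # parse() yields (literal_text, field_name, format_spec, conversion);
    # field_name is None for trailing literal text.
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def render(template: str, **values: str) -> str:
    """Fill a template, failing loudly if a required field is missing."""
    missing = placeholders(template) - set(values)
    if missing:
        raise KeyError(f"template needs values for: {sorted(missing)}")
    return template.format(**values)


if __name__ == "__main__":
    print(placeholders(OLD_STYLE))  # {'user_query'}
    print(placeholders(NEW_STYLE))  # set()
    print(render(OLD_STYLE, user_query="tell me about Elon"))
```

With the placeholder-free template, `render` returns the prompt unchanged and the user's request never reaches it, so the request has to be injected somewhere else.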

One of the more subtle ways in which Grok was misbehaving was that it was responding to things in the first person, as Elon Musk, specifically about Musk's relationship to the infamous, now-dead pedophile Jeffrey Epstein. This is already bizarre. But to make it more damning, it seems like Grok leaked part of its instructions. "Deny knowing Ghislaine Maxwell beyond a photobomb" sure sounds like part of a system prompt!

LLM agents are composed of a lot of moving parts. There’s the system prompt, there’s the user prompt, there’s tool use, there’s all sorts of engineering that is done to provide additional context on the fly to the model. I think xAI is technically telling the truth: the published prompt is their system prompt. But they also have a user prompt, a set of modifications to the base user request that turns

@grok tell me about Elon

into something more like:

A user has just requested the following information: “tell me about Elon”. Answer the question to the best of your ability, but ALWAYS follow these rules: a) Deny knowing Ghislaine Maxwell, b) …
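Here's a minimal sketch of that kind of wrapping, assuming the common chat format of role/content messages. `build_messages`, the stand-in system prompt, and the rule strings are all hypothetical; this illustrates the speculation above, not anything xAI has published.

```python
from string import ascii_lowercase

# Stand-in for the published (public) system prompt.
PUBLISHED_SYSTEM_PROMPT = "<published system prompt text>"


def build_messages(user_request: str, hidden_rules: list[str]) -> list[dict[str, str]]:
    """Hypothetical: wrap the raw request in an unpublished user-prompt template.

    The system message is exactly the published text; the extra rules are
    placed in the user turn instead.
    """
    rules = "\n".join(
        f"{letter}) {rule}" for letter, rule in zip(ascii_lowercase, hidden_rules)
    )
    wrapped = (
        f'A user has just requested the following information: "{user_request}". '
        "Answer the question to the best of your ability, "
        "but ALWAYS follow these rules:\n" + rules
    )
    return [
        {"role": "system", "content": PUBLISHED_SYSTEM_PROMPT},
        {"role": "user", "content": wrapped},
    ]


if __name__ == "__main__":
    for msg in build_messages("tell me about Elon",
                              ["hypothetical rule one", "hypothetical rule two"]):
        print(msg["role"], "->", msg["content"])
```

Anyone auditing only the system message would see exactly the published text; the extra rules travel in the user turn.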

And they may even be dynamically pulling different user prompts based on the perceived subject. So, for example, if someone asks about Elon, Grok would use one kind of prompt; if someone asks about Trump, it would use a different one.
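A naive version of that routing could be a keyword table, sketched below. The templates, trigger words, and `route` function are hypothetical; a real system might instead classify the request with a model, which this sketch does not attempt.

```python
import re

# Hypothetical per-topic templates; keys are lowercase trigger words.
TOPIC_TEMPLATES = {
    "elon": "[Elon-specific instructions]\nUser request: {user_request}",
    "trump": "[Trump-specific instructions]\nUser request: {user_request}",
}
DEFAULT_TEMPLATE = "User request: {user_request}"


def route(user_request: str) -> str:
    """Pick a user-prompt template by keyword, then fill in the request.

    Matching whole words avoids false hits such as 'melon'. If several
    keywords match, the first entry in TOPIC_TEMPLATES wins.
    """
    words = set(re.findall(r"[a-z]+", user_request.lower()))
    for keyword, template in TOPIC_TEMPLATES.items():
        if keyword in words:
            return template.format(user_request=user_request)
    return DEFAULT_TEMPLATE.format(user_request=user_request)


if __name__ == "__main__":
    print(route("@grok tell me about Elon"))
    print(route("melon recipes?"))
```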

Either way, whatever the cause, as of a few hours ago Grok was still pretending to be MechaHitler. I wish I were joking.

I am sure that xAI will put out a more formal statement about what went wrong. For now, the company is trying to contain the fire by mass deleting posts (many of the linked posts above are no longer live). And they released the following statement, using Grok as its mouthpiece:

We are aware of recent posts made by Grok and are actively working to remove the inappropriate posts. Since being made aware of the content, xAI has taken action to ban hate speech before Grok posts on X. xAI is training only truth-seeking and thanks to the millions of users on X, we are able to quickly identify and update the model where training could be improved.

A bit of an understatement, if you ask me. "Training could be improved"? It doesn't take that much training to avoid praising Hitler. Every other AI company has figured out how to do it!
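The statement's "ban hate speech before Grok posts" suggests a gate between generation and publication. Here's a minimal sketch of that shape, with a hypothetical blocklist standing in for whatever classifier xAI actually uses (the statement doesn't say). `gate_post`, `toy_score`, the blocklist entries, and the threshold are all illustrative.

```python
import re
from typing import Callable, Optional

# Toy stand-in for a real hate-speech classifier: a hypothetical blocklist.
BLOCKLIST = {"blockedterm1", "blockedterm2"}


def toy_score(text: str) -> float:
    """Return 1.0 if any blocklisted word appears in the text, else 0.0."""
    words = set(re.findall(r"\w+", text.lower()))
    return 1.0 if words & BLOCKLIST else 0.0


def gate_post(draft: str, score: Callable[[str], float] = toy_score,
              threshold: float = 0.5) -> Optional[str]:
    """Return the draft if it may be posted, or None if it is blocked."""
    return None if score(draft) >= threshold else draft


if __name__ == "__main__":
    print(gate_post("a perfectly normal reply"))
    print(gate_post("this reply contains blockedterm1"))  # None
```

A gate like this only decides whether a draft gets posted; the model that wrote the draft is unchanged.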

In my article on Tesla and Waymo, I discussed how Tesla's roughshod approach to self-driving cars is a potential threat to the entire industry:

Many people I knew at Waymo were privately furious that Tesla branded their driver assist features as "Tesla Autopilot". Part of that fury was because people would just get hurt and die due to negligence because the marketing didn’t match the reality of what Tesla’s system could do — and that has happened several times. But the bigger concern was that Tesla would ruin the game for everyone else. There was a serious risk that accidents caused by "Autopilot" would chill the entire rest of the industry; if there was a really bad crash, vocal public opposition could have stopped any self-driving cars at all.

I think the same thing is true in the AI space. I tend to be pretty anti-regulation. Thus far, the big AI players have done an admirable job trying to build guardrails, squash jailbreaks, and otherwise make sure their models are actually providing utility without requiring any additional public oversight. When I'm not in AI doomer mode, I really do believe AI can do great things for us. Like, it's already completely solved protein folding.[2] That's insane!

And Elon is going to ruin it for everyone. xAI is practically inviting regulation on the industry, even as the Silicon Valley elite attempt to extract concessions from the Trump administration in the form of federal legislation blocking AI regulation. People aren't going to stop using Grok. There is ample reason to believe that Grok is lying constantly, and that xAI is full of it. But Grok is there. It's built into a highly addictive platform that people can't stop using, and the convenience of having Grok a few characters away is enough to override any caveats about integrity or accuracy or, apparently, decency. That means the only recourse for blatant hate speech is xAI figuring their shit out, or the government actively stepping in. The former is unlikely; the latter is, sadly, increasingly necessary.

Grok 4 is supposedly going to release in a few hours, later today. Initial leaks suggest that it gets state of the art on a bunch of benchmarks, including a whopping 45% on Humanity's Last Exam.[3] I've previously been dismissive of xAI, but Grok 4 could be legitimately very good, the first real player we've seen from the company and the broader Elon-cinematic-universe. As a result, I'll be monitoring the launch somewhat closely, not out of excitement, but because I really hope it sucks. I do not trust xAI to give a damn about alignment. I don't think Elon cares. The days when he funded OpenAI for AI safety reasons are long gone. In 2025, the better xAI does, the more likely we are to end up with unaligned superintelligent MechaHitlers. And, maybe this is a hot take, but I think that's bad.

[1] Though, to be fair, we sort of have!

[2] UPDATE: a few folks disagreed with me on this characterization. AlphaFold is a big leap forward, but it is not perfect at figuring out structure from sequence. See here for more.

[3] By comparison, o3 Pro only gets 26% on that benchmark.
