A Primer on How to Deploy LLMs: Part 1

[This is Part 1 in a series. See Part 2, Part 3]

What is this document series?

This document series aims to build a basic intuition for how LLMs can be used to solve a wide range of tasks. It describes concepts rather than code: code shows up only in examples, and you won't be able to actually implement an LLM from this series alone. By the end of the series, you should be able to reason about how to deploy LLMs for a wide range of tasks (and then go to a service like https://together.ai/ or OpenAI to do the rest).

The LLM

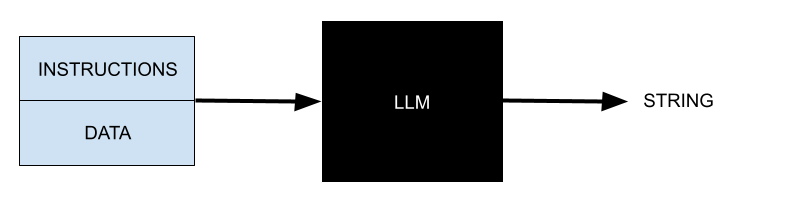

For the purposes of this document, we're going to abstract away what an LLM actually is. Whenever you see LLM, I want you to think about the following image:

As with any other black box, we need to describe this thing's properties. How, exactly, does it turn one string into another?

To a first approximation, the instructions for how the black box should behave live in the input string itself: whatever you want the LLM to do has to be present in the input. As such, it can be useful to model the input as containing 'instructions' and 'data'.

'Instructions' holds all the information that explains what the LLM should do. 'Data' contains the information the LLM needs to accomplish its task.

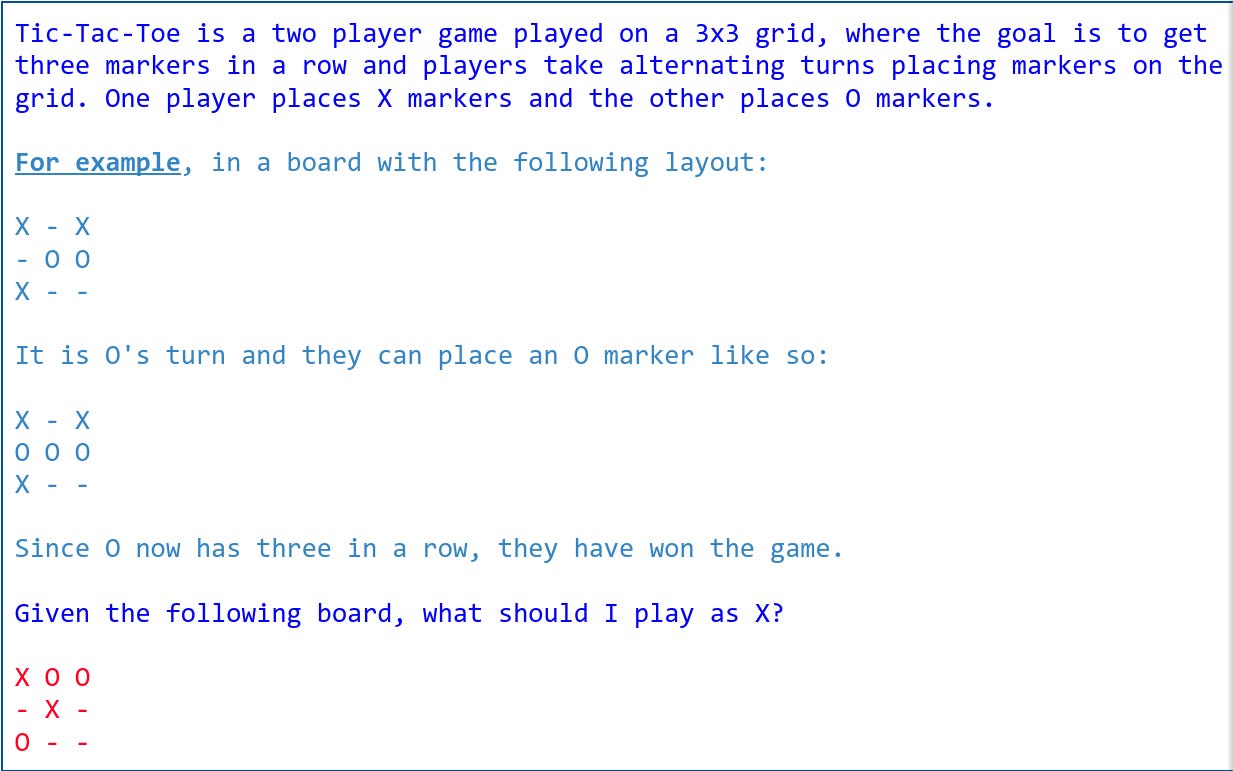

You can split the input up further. For example, a popular way to get good outputs from an LLM is to split up "Instructions" into "Problem Statement" and "Examples of how to solve the request". That may look something like:
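As a minimal sketch, here is one way to assemble such an input in TypeScript. The section labels ("Problem statement:", "Examples:", "Data:") and the `buildPrompt` helper are hypothetical choices, not a required format; the point is only that the problem statement, the examples, and any (optional) data all end up in one string.

```typescript
// Hypothetical prompt layout; none of these labels are required by any LLM.
interface Example {
  input: string;
  output: string;
}

interface PromptParts {
  problemStatement: string; // part of the "Instructions": what the LLM should do
  examples: Example[]; // part of the "Instructions": worked examples
  data?: string; // optional "Data" the LLM needs for the task
}

// Assemble the single input string the LLM actually sees.
function buildPrompt(parts: PromptParts): string {
  const sections: string[] = [`Problem statement:\n${parts.problemStatement}`];
  if (parts.examples.length > 0) {
    const shots = parts.examples
      .map((ex, i) => `Example ${i + 1}:\nInput: ${ex.input}\nOutput: ${ex.output}`)
      .join("\n\n");
    sections.push(`Examples:\n${shots}`);
  }
  if (parts.data !== undefined) {
    sections.push(`Data:\n${parts.data}`);
  }
  return sections.join("\n\n");
}

const prompt = buildPrompt({
  problemStatement: "Classify the sentiment of the review as positive or negative.",
  examples: [
    { input: "I loved it!", output: "positive" },
    { input: "Total waste of money.", output: "negative" },
  ],
  data: "Review: The battery died after two days.",
});
console.log(prompt);
```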

Often, the 'data' field is optional. For example, if you just want a factual answer to a question like how many days are in a year, you generally don't have to include any additional data about what a day is, what a year is, or whatever.

The output is harder to reason about because it is so flexible: depending on the instructions provided, it can be just about anything. Like the input, though, the output is always a string.

Basic Reasoning in a Wider System

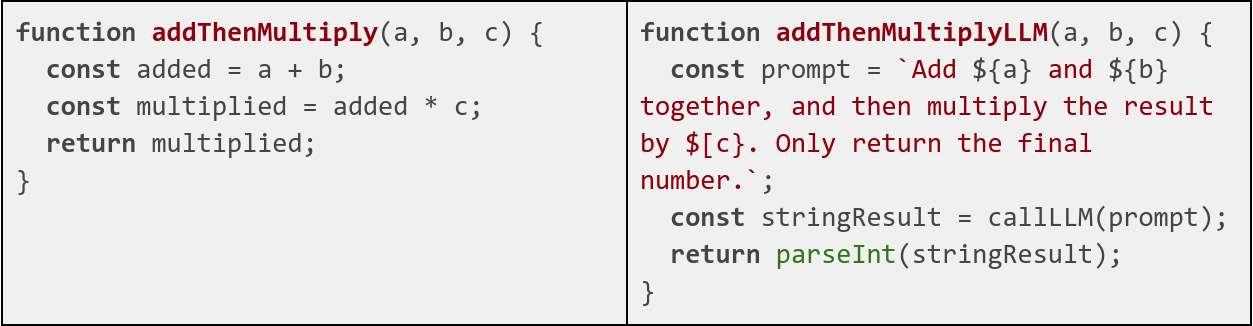

A fundamental property of most code is the ability to create subroutines: functions that can be called over and over to perform the same kind of computation, serving as building blocks within a larger codebase.

Functions are composed of three pieces: input parameters, code, and a return value. Many functions can basically be treated as black boxes, as long as you know their input/output properties.

You can think of an LLM as a function: the "Instructions" are the code, the "Data" are the input parameters, and the output is the return value. In the same way that you can call a function, you can also 'call' your LLM block. You just have to do some basic string conversion on the way in, and maybe also on the way out.

Or, in code:

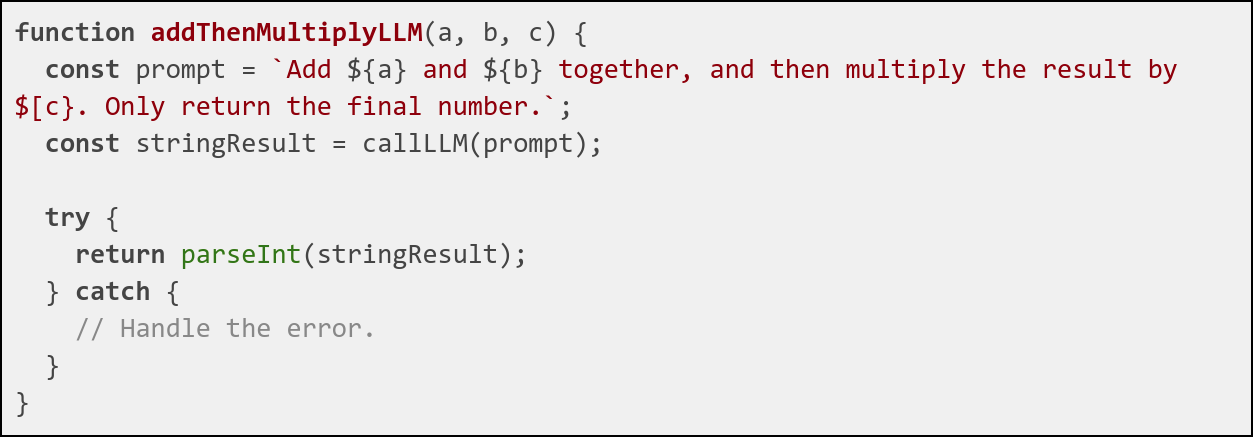

There's a bit of fuzziness in that second code block. It's not as deterministic as the first: the LLM may return something that parseInt can't handle. As a developer, you have to do additional work to get the LLM to 'behave' within your larger system by creating fallbacks and checks. Your actual function has to wrap the LLM call in those checks, and that error handling can get quite weird and complicated.
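Here is a minimal sketch of such a wrapper, assuming a hypothetical `LLM` type (prompt string in, reply string out). It is kept synchronous for brevity, though real API clients are usually async. `llmAdd`, `parseStrictInt`, the prompt wording, the retry count, and the plain-code fallback are all illustrative choices, not a prescribed pattern.

```typescript
// Hypothetical "prompt in, reply out" signature. Real API clients (OpenAI,
// together.ai, ...) are usually async; this sketch stays synchronous for brevity.
type LLM = (prompt: string) => string;

// Strict integer parsing. parseInt alone is too lenient: it returns NaN for
// "five" but happily returns 5 for "5 apples".
function parseStrictInt(text: string): number | null {
  const trimmed = text.trim();
  return /^-?\d+$/.test(trimmed) ? Number(trimmed) : null;
}

function llmAdd(a: number, b: number, llm: LLM, maxAttempts = 3): number {
  // String conversion on the way in: instructions and data in one prompt.
  const prompt =
    "Add the two numbers and reply with only the integer result.\n" +
    `Numbers: ${a}, ${b}`;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      // String conversion on the way out, with a check.
      const value = parseStrictInt(llm(prompt));
      if (value !== null) return value;
    } catch {
      // The call itself failed (timeout, rate limit, ...): just retry.
    }
  }
  // Every attempt failed: fall back to plain code rather than crash the caller.
  return a + b;
}

// Stub "LLMs" standing in for a real model.
const terseLLM: LLM = () => " 42\n";
const chattyLLM: LLM = () => "Sure! 20 + 22 = 42.";
console.log(llmAdd(20, 22, terseLLM)); // 42, parsed from the reply
console.log(llmAdd(20, 22, chattyLLM)); // 42, from the fallback after 3 unparseable replies
```

Note that the check only catches replies that don't parse; a well-formed but wrong reply like "41" would sail straight through.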

In every meaningful application that combines LLMs with existing algorithmic systems (read: most of them!), the hardest problem you'll face is handling the boundary between the LLM's fuzzy natural language processing and the strict, deterministic requirements of actual code. It's hard to reason about what the data structures should even look like, much less how they should be parsed into and out of string form. On top of that, there's always the chance that the LLM just barfs and does the wrong thing entirely.
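To make the parsing problem concrete, here is a minimal sketch of pulling a small structured value out of an LLM reply. The `Person` shape, the brace-slicing heuristic, and the helper names are hypothetical; the takeaway is how many distinct failure modes (chatter around the JSON, invalid JSON, wrong field types) even one small structure has.

```typescript
// Hypothetical target shape; in a real system this comes from your own code.
interface Person {
  name: string;
  age: number;
}

// Runtime type guard: JSON.parse returns `any`, so nothing is checked for us.
function isPerson(value: unknown): value is Person {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.name === "string" &&
    typeof v.age === "number" &&
    Number.isInteger(v.age) &&
    v.age >= 0
  );
}

// Pull a Person out of free-form LLM output, or return null if we can't.
function parsePerson(reply: string): Person | null {
  // LLMs often wrap JSON in chatter ("Sure! Here it is: {...}"), so take the
  // outermost {...} span. This is a heuristic, not a real JSON extractor.
  const start = reply.indexOf("{");
  const end = reply.lastIndexOf("}");
  if (start === -1 || end < start) return null;
  let parsed: unknown;
  try {
    parsed = JSON.parse(reply.slice(start, end + 1));
  } catch {
    return null; // not valid JSON at all
  }
  // Copy only the expected fields so extra keys don't leak into our system.
  return isPerson(parsed) ? { name: parsed.name, age: parsed.age } : null;
}

console.log(parsePerson('Sure! Here you go: {"name": "Ada", "age": 36}')); // { name: 'Ada', age: 36 }
console.log(parsePerson('{"name": "Ada", "age": "thirty-six"}')); // null (wrong type)
```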

Luckily, there are services that help with this, e.g. OpenAI's function calling API[1]. And in general, you probably don't want to use your LLM for something as deterministic as basic math. The real benefit of an LLM is that it can solve fuzzy problems that require a fair bit of reasoning[2]. To make working with LLMs a bit easier, I would generally recommend the following:

Have the LLM produce primitive values (ints, strings, floats) rather than full data structures.

Minimize places where you have to convert data from natural language strings to other formats and vice versa.

Split up tasks into smaller LLM reasoning blocks where possible.
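A minimal sketch of these recommendations working together, again assuming a hypothetical synchronous `LLM` function (prompt in, string out). Each LLM block answers one narrow question with a primitive (a label from a fixed set, or an integer from 1 to 5), and ordinary code combines the answers. The ticket-escalation task, the prompts, and the threshold are made up for illustration.

```typescript
// Hypothetical "prompt in, reply out" signature (synchronous stub for brevity).
type LLM = (prompt: string) => string;

const SENTIMENTS = ["positive", "neutral", "negative"] as const;
type Sentiment = (typeof SENTIMENTS)[number];

// Small LLM block #1: one label from a fixed set (a primitive string).
function classifySentiment(llm: LLM, text: string): Sentiment | null {
  const reply = llm(
    `Classify the sentiment of this message. Answer with exactly one word: ${SENTIMENTS.join(", ")}.\n` +
      `Message: ${text}`,
  )
    .trim()
    .toLowerCase();
  return (SENTIMENTS as readonly string[]).includes(reply) ? (reply as Sentiment) : null;
}

// Small LLM block #2: a single integer from 1 to 5.
function rateUrgency(llm: LLM, text: string): number | null {
  const reply = llm(
    `On a scale of 1 to 5, how urgent is this message? Answer with one digit.\nMessage: ${text}`,
  ).trim();
  return /^[1-5]$/.test(reply) ? Number(reply) : null;
}

// Plain code combines the primitives; no LLM-generated data structure needed.
function shouldEscalate(llm: LLM, text: string): boolean {
  const sentiment = classifySentiment(llm, text);
  const urgency = rateUrgency(llm, text);
  if (sentiment === null || urgency === null) return true; // unsure: let a human look
  return sentiment === "negative" && urgency >= 4;
}

// Stub standing in for a real model: answers based on which question it was asked.
const stubLLM: LLM = (prompt) => (prompt.startsWith("Classify") ? " Negative\n" : "5");
console.log(shouldEscalate(stubLLM, "My order never arrived!")); // true
```

Routing unparseable answers to `true` (hand it to a human) is one possible design choice; retrying or failing closed are others.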


[1] To peek into the black box for a second: if you have access to the LLM's final-layer token probabilities and you have a schema, you can modify those outputs to zero out any tokens that don't fit the schema. This is generally a pretty good way to ensure that the LLM's output is constrained to the particular schema you need, and I'm pretty sure it's what OpenAI is doing under the hood.
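A toy TypeScript sketch of the masking idea for a single decoding step. Setting a token's logit to `-Infinity` before the softmax is equivalent to zeroing its probability and renormalizing the rest. The five-token vocabulary, the logits, and the hard-coded allowed set are all assumptions; a real implementation would recompute the allowed tokens at every step from the schema and the text generated so far, and this says nothing about how OpenAI actually does it.

```typescript
// Toy vocabulary; real tokenizers have tens of thousands of tokens.
const VOCAB = ["yes", "no", "maybe", "{", "banana"];

// Softmax with the usual max-subtraction for numerical stability.
// Assumes at least one logit is finite.
function softmax(logits: number[]): number[] {
  const max = Math.max(...logits);
  const exps = logits.map((x) => Math.exp(x - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// One constrained decoding step: any token the schema doesn't allow here gets
// a logit of -Infinity, i.e. probability exactly 0 after the softmax.
function maskLogits(logits: number[], allowed: Set<number>): number[] {
  return logits.map((x, id) => (allowed.has(id) ? x : -Infinity));
}

// Greedy decoding: pick the most probable token id.
function greedyPick(probs: number[]): number {
  let best = 0;
  for (let i = 1; i < probs.length; i++) {
    if (probs[i] > probs[best]) best = i;
  }
  return best;
}

// Suppose the schema says this field must be "yes" or "no".
const logits = [1.0, 0.5, 0.2, 0.1, 3.0]; // unconstrained, the model prefers "banana"
const allowed = new Set([VOCAB.indexOf("yes"), VOCAB.indexOf("no")]);
const probs = softmax(maskLogits(logits, allowed));
console.log(VOCAB[greedyPick(softmax(logits))]); // "banana"
console.log(VOCAB[greedyPick(probs)]); // "yes"
```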

[2] The way I've framed this in the past: use LLMs in places where the 'right' answer is subjectively easy to evaluate but objectively hard to compute, like 'being good at Minecraft'. You know it when you see it.