Tech things: Deepseek, but make it cheaper

I.

A few years ago, around 2018-2019, a study was making the rounds claiming that smiling would literally make you happier, regardless of your current mood. If you google 'smiling makes you happier' you'll find a dozen articles from all the usual places (NBC, NPR, the LA Times), as well as a bunch of content mills that mostly just repost whatever is popular to get clicks.

This seems to be one of those stories that does the rounds semi-regularly, mostly pushed by Reddit bot accounts looking for karma or journalists without a story. I had definitely heard this little factoid well before 2018, and with a bit of digging I found a NYT article about the phenomenon from 1989. I guess everyone has slow news days.

I was always really skeptical of this claim. For a long time I just assumed the relevant studies were flawed in some way and that the authors were reading the data backwards, much like the now-infamous hungry judge effect.

But oddly enough the smiling thing seems to replicate.

We don't really know why smiling makes you happier, but the leading theory is that it's pattern recognition. Your brain knows that smiling is associated with a positive mood, but your brain is also not particularly picky about causality, so the wires go both ways and the act of contracting your facial muscles results in a mild release of dopamine, serotonin, and endorphins. It's very Pavlov's dogs.

Obviously, the results are subtle. Smiling isn't going to cure depression or PTSD. But still, I think this is really, really weird. The brain isn't supposed to work this way. It would be like reading a really complicated math problem, not really making any progress, and then saying 'a ha!' out loud in order to force yourself to have a eureka moment and solve the problem. Like, what.

II.

The earliest example of prompt engineering was this tweet.

Back before AI models were good at generating images, they were only okay at generating images. Most of the time you'd get things that were low resolution and kind of munted.[1] But a few enterprising researchers/hackers realized that you could get the models to output significantly better images by simply appending the phrase 'unreal engine' to the end of your text input.

This works at all because these AI models are all pattern matchers.[2] In the course of training, the models learn that images tagged with 'unreal engine' have a particular high-res, glossy sheen to them. So when they see an input tagged with 'unreal engine', they output images that look…well, like Unreal Engine stills.[3] This little trick basically kicked off the entire field of 'prompt engineering': figuring out what words to use to get your model to do specific things, often based on a deeper understanding of the kinds of patterns it likely picked up.

Prompt engineering is very popular in the generative image community, and it's shockingly in-depth and technical. In addition to all sorts of plain-text tags, people experiment with everything from weighting different words in the same prompt to varying the number of sequential commas to get the model to do what they want.

Still, this isn't reasoning. This is just, like, getting a particularly strange search engine with a particularly strange syntax to return the right results.

III.

A week ago a group of researchers published S1: Simple test-time scaling. This is possibly one of the funniest papers I've ever read. It's funny for two reasons: first, because it's brilliant in a way that makes no god-damned sense, and second, because the result is roughly on par with OpenAI's o1 for 0.00005% of the price.

The key insight from the paper is this paragraph:

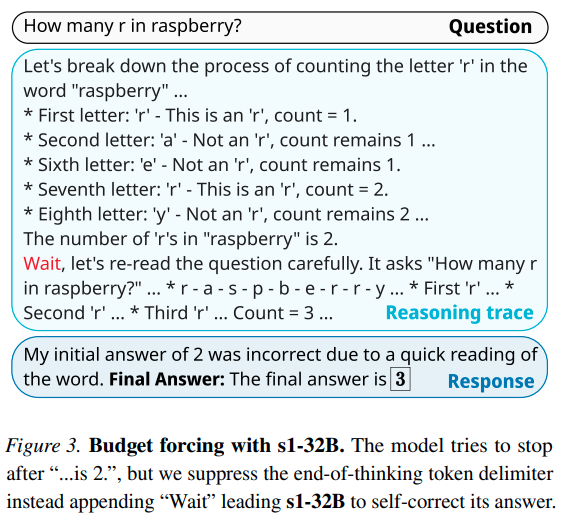

We propose a simple decoding-time intervention by forcing a maximum and/or minimum number of thinking tokens at test time. Specifically, we enforce a maximum token count by simply appending the end-of-thinking token delimiter and “Final Answer:” to early exit the thinking stage and make the model provide its current best answer. To enforce a minimum, we suppress the generation of the end-of-thinking token delimiter and optionally append the string “Wait” to the model’s current reasoning trace to encourage the model to reflect on its current generation. Figure 3 contains an example of how this simple approach can lead the model to arrive at a better answer.
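To make the quoted procedure (which the paper calls budget forcing) concrete, here is a minimal sketch of it as a greedy decoding loop over a list of string tokens. Everything model-specific is an assumption: `END_THINK` stands in for whatever special end-of-thinking token a real model uses, `toy_model` is a hypothetical stub rather than an LLM, and a real implementation would suppress the delimiter at the logit level instead of comparing strings. Note that the appended "Wait" tokens count toward the budget here.

```python
from typing import Callable, List

# Hypothetical stand-in for a model's special end-of-thinking delimiter token.
END_THINK = "<end_of_thinking>"


def budget_forced_thinking(
    next_token: Callable[[List[str]], str],
    prompt: List[str],
    min_tokens: int,
    max_tokens: int,
) -> List[str]:
    """Greedy decoding loop that keeps the thinking trace between min_tokens and max_tokens."""
    trace: List[str] = []
    while True:
        if len(trace) >= max_tokens:
            # Maximum reached: force the end-of-thinking delimiter plus "Final Answer:".
            return trace + [END_THINK, "Final Answer:"]
        token = next_token(prompt + trace)
        if token == END_THINK:
            if len(trace) < min_tokens:
                # Too early: suppress the delimiter and append "Wait" to prompt reflection.
                trace.append("Wait")
                continue
            # Long enough: let the thinking stage end normally; the answer would follow.
            return trace + [END_THINK]
        trace.append(token)


def toy_model(context: List[str]) -> str:
    """Hypothetical stub model: keeps reasoning after "Wait", otherwise stops thinking early."""
    if context[-1] == "Wait":
        return "hmm"
    return END_THINK if len(context) > 2 else "step"


if __name__ == "__main__":
    print(budget_forced_thinking(toy_model, ["Q"], min_tokens=0, max_tokens=10))
    # ['step', 'step', '<end_of_thinking>']
    print(budget_forced_thinking(toy_model, ["Q"], min_tokens=4, max_tokens=10))
    # ['step', 'step', 'Wait', 'hmm', '<end_of_thinking>']
    print(budget_forced_thinking(toy_model, ["Q"], min_tokens=0, max_tokens=1))
    # ['step', '<end_of_thinking>', 'Final Answer:']
```

The second call is the interesting one: the stub tries to stop after two tokens, gets a "Wait" instead, and keeps going.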

This is, frankly, amazing? The fact that this works at all is insane.

I think what's happening here is that these models are learning language statistics. That is, they try to figure out what words are most likely to come after a given prefix. And it turns out that the most likely thing to come after the token "Wait" is some kind of inductive leap in reasoning. So you can prompt engineer your way to a better reasoning process, the same way you can prompt engineer your way to a higher-definition image.
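A toy illustration of "learning language statistics": the sketch below counts which word follows which in a tiny, made-up corpus and predicts the most frequent follower. This is a deliberate simplification. Real LLMs learn much richer conditional distributions over subword tokens with neural networks, not bigram counts, and the corpus and function names here are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def bigram_counts(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for every word, how often each other word immediately follows it."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def most_likely_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it never had a follower."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical miniature corpus of reasoning traces.
corpus = [
    "Wait that is wrong",
    "Wait actually the answer is 7",
    "Wait actually we missed a case",
    "So the answer is 5",
]

counts = bigram_counts(corpus)
print(most_likely_next(counts, "Wait"))    # actually
print(most_likely_next(counts, "answer"))  # is
```

If the training text is full of traces where "Wait" is followed by a correction, the statistics alone will push the model toward producing one.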

But in a more meaningful sense, this is "smiling to make yourself happier" on steroids. They are literally fabricating eureka moments by doing the LLM equivalent of saying "a ha!" out loud.

And it's such a simple intervention! The authors don't even have to do much additional training; this just works out of the box. Actually, we can be even more precise: the authors spent only $50 to build S1. By comparison, Deepseek R1 supposedly cost $5M, and OpenAI o1 supposedly cost $100M.[4]
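For a sense of scale, here is the ratio arithmetic on the reported figures quoted above. These are the post's "supposedly" numbers, not verified costs, and the helper name is just illustrative.

```python
# Training-cost figures as quoted in this post; all of them are "supposedly" numbers.
REPORTED_COST_USD = {
    "S1": 50,
    "Deepseek R1": 5_000_000,
    "OpenAI o1": 100_000_000,
}


def cost_fraction(model: str, baseline: str) -> float:
    """Return model's reported cost as a fraction of baseline's reported cost."""
    return REPORTED_COST_USD[model] / REPORTED_COST_USD[baseline]


for baseline in ("Deepseek R1", "OpenAI o1"):
    fraction = cost_fraction("S1", baseline)
    # The :.5% format multiplies by 100 and keeps five decimal places.
    print(f"S1 cost {fraction:.5%} of {baseline}")
# S1 cost 0.00100% of Deepseek R1
# S1 cost 0.00005% of OpenAI o1
```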

IV.

Deepseek was released on Jan 20th. About a week later, it became the number one most downloaded free app on the App Store. This triggered a lot of hand-wringing in the markets: NVIDIA lost a full $600B in market cap, and tech stocks in general were down.

At the time, I wrote:

To be clear, the fact that Deepseek exists isn’t really that significant. Everyone always knew there was going to be a big Chinese model. The country is not afraid to wall off its populace from the West; it was never going to allow LLMs that are happy to tell people about Tiananmen Square and Winnie the Pooh and Taiwan. So when the first Chinese LLMs came on the market, everyone was like, “Yeah, whatever.” The first batch, the Qwen models from Alibaba, were, like, fine.

Even though I expected a set of LLMs to arise thanks to protectionism and state interest, I (and everyone else) assumed those models were just going to be worse than the US ones. This is how technical development has always been, after all. Google Search is good, Baidu is eh, and if you can use Google over Baidu you do. Amazon is good, Alibaba is eh, and if you can use Amazon over Alibaba you do. Facebook is good, TikTok is eh, and if you can…no wait, that doesn't work, does it?

Anyway, the larger point is that no one really thought the Chinese models were a threat. Sure, people would talk about how the Chinese government was a threat, but it was always in a hypothetical way, mostly used to justify infinite capitalist investment without any corresponding concerns about AI safety or alignment. I don't think most people actually thought that a Chinese company would come out and deploy a model that is simply better than what we have in the States.

…

I think the last year has been one of a lot of existential angst among software engineers generally and AI engineers specifically. I've heard L6 and L7 engineers at Google talk about trying to find new skills because they think AI is going to take their jobs within a year or two. Now there's another layer on top of everything else. Maybe eventually the AI will take your job, but it's going to be outsourced to a Chinese AI engineer first!

A few days later, the market analysts caught up. NVIDIA, and to an extent Microsoft, Google, Meta, Amazon, and Apple, all benefit in the short term from "intelligence" being expensive. The more expensive it is to have an automated software engineer (or whatever), the better margins these behemoth companies can extract. Partly this is because it's a given that once you have AGI everyone is going to need access to it to stay relevant. What, are you not going to use the automatic PhD that answers all your questions and solves all your problems instantly? But partly, this is because the winner-take-all LLM-infra-layer market hypothesis relies on 'good enough' intelligence being out of reach, or at least out of reach enough to result in consolidation. I've written about this too:

Going back to LLMs, I think you see roughly the same market dynamics. LLMs are pretty easy to make, and lots of people know how to do it: you learn how in any CS program worth a damn. But there are massive economies of scale (GPUs, data access) that make it hard for newcomers to compete, and using an LLM is effectively free, so consumers have no stickiness and will always go for the best option. You may eventually see one or two niche LLM providers, like our LexisNexis above. But for the average person these don't matter at all; the big money is in becoming the LLM layer of the Internet.

Everyone is investing in Google, OpenAI, and Anthropic because they think one of those companies is going to win, the way Google won search. Deepseek was a double whammy for these investors. Not only was there now a new kid on the block who may beat all the old players, that kid was showing everyone else how to play ball too.

It may be the case that superintelligence is still out of reach unless you have super clusters of compute. But anecdotally, intelligence itself seems to have diminishing returns for the average consumer. Most people get most of what they need out of GPT-4o and don't really need the extra reasoning capacity of OpenAI o1.[5] So the real threat to all these LLM providers today is that it may turn out to be easy and cheap to create an average intelligence. That might result in a lot of smaller companies creating competition at the provider layer, at least until the cost per token actually falls to zero.

In this context, the S1 paper is potentially another significant blow to the moat claimed by these AI companies. If anyone can make their own o1 model for $50, what are we even doing with OpenAI?

V.

The research being done by labs big and small is, for the most part, not mutually exclusive. Advancements are coming in a variety of complementary forms that drive the entire industry and experience forward.

A good metaphor for this kind of advancement is Moore's law. Very roughly, Moore's law states that the number of transistors on a chip doubles every two years.

Moore's law does not make assumptions about where those gains are coming from. Moore didn't say something like "chip capacity will double because we're going to get really good at soldering" or whatever. He left it open. And in fact in the 60-odd years since Gordon Moore originally laid out his thesis, we've observed that the doubling of transistors came from all sorts of places — better materials science, better manufacturing, better understanding of physics, all in addition to the (obvious) better chip design.

You can imagine a kind of Moore's law for intelligence, too. We might expect artificial intelligence to double along some axis every year. Naively we'd expect that improvement to be downstream of more data and more compute. But it could also come from better quality data collection, more efficient deep learning architectures, more time spent on inference, and, yes, better prompting.
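To make the compounding concrete, here is a small sketch of the doubling arithmetic. The doubling periods are the rough figures from the text (two years for transistors, one year for the hypothetical intelligence axis); the function name is illustrative, and nothing here is a forecast.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Total multiplicative growth after `years` if a quantity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Moore's law as stated above: transistor counts double roughly every two years.
print(growth_factor(60, 2))   # 1073741824.0, i.e. roughly a billion-fold over 60 years

# A hypothetical "Moore's law for intelligence" that doubles once a year.
print(growth_factor(5, 1))    # 32.0
print(growth_factor(10, 1))   # 1024.0
```

Because the growth is exponential, halving the doubling period squares the total growth over any fixed window: 2^(60/1) = (2^(60/2))^2.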

It's amazing that the S1 researchers were able to compete with both o1 and Deepseek on basically no budget. The authors should win all the awards, or at least get some very lucrative job offers. In my opinion, though, the really beautiful thing about the S1 paper is that everyone else can immediately implement the S1 prompt engineering innovations to make their own models better. The existence and success of S1 suggests that there is still a ton of low-hanging fruit to be picked.

It's tempting to believe that, in light of recent innovations, "big AI" is dead. Certainly that's what the entire rest of this essay might suggest. And yet… maybe I'm just being a contrarian, but I still believe that the LLM provider layer will consolidate. I think the consumer usage story still holds: when the cost of a token is 0, the market will simply consolidate around the best offering. That in turn makes me very bullish on Google, because their experiments on increasing the context window of their models do feel genuinely hard to replicate.[6] More on that in a different post.

For now, though, I think we all need to take whatever timelines we have and halve them. For better or for worse, cheap automated general intelligence is coming faster every single day.

[1] Thanks to my Australian friends for this fantastic addition to my vocabulary.

[2] That's not to say they aren't reasoning. I'm increasingly convinced that pattern matching is reasoning.

[3] For a minute this caused a pretty significant ethical debate, because pretty soon after discovering the unreal engine trick people also discovered that you could mimic the styles of other artists by simply appending their names to the input prompt.

[4] There's reason to be skeptical of both Deepseek's and OpenAI's reporting here. It's unclear whether they are hiding even larger models that are used to train the smaller models they actually release. This process, known as distillation, is very common and cost-efficient, but it can result in different people referring to different things when they say "cost of training". More here and here. This is on top of the usual reasons to be skeptical of whatever is coming out of China.

[5] As the better models get faster I expect this to change: people will naturally drift towards the stronger models just based on vibes, the same way people naturally drift towards Google because the results feel better. But right now there’s a noticeable response-time difference between o1 and GPT-4o, so a lot of folks use the latter just because they are impatient.

[6] This is in contrast to the work OpenAI has been doing, which, as we've seen, can be replicated.