Tech Things: Meta spends $14b to hire a single guy

Sure, they kinda sorta acquired Scale AI too.

[UPDATE: apparently Scale AI employees received a dividend equal to a premium on the value of all of their stock. In other words, all of the employees were effectively bought out, but still retain their stock AND still have any upside if the company continues to do well. This neatly explains why the price tag was essentially the valuation of the company. It also increases my respect for both Zuck and Alexandr, the latter of whom supposedly requested this deal so that Scale employees were not hung out to dry. Much of the analysis in the rest of this article remains unchanged, but I feel that this is important additional context: much of the $14b did not go to Alexandr.]

I'm a bit late to this story because I got married two weeks ago, and if you've ever been to an Indian wedding you know that it takes at least a month to prepare for and at least two weeks just to recover from. A lot happened in that time, apparently we're at war with Iran now? Wow, crazy. I'll probably write about that at some point. For now I'm more interested in a smaller story that's already more or less fallen out of the news: Meta spent $14b to acquire Scale AI.

Ok, that's not exactly true. An acquisition normally requires the purchasing company to buy out all outstanding company shares. Existing shareholders, including employees, have their stock converted to stock of the purchasing company, or to cold hard cash. In this sense, it seems Meta hasn't fully gone through with an acquisition. Instead, it has "invested" $14b in the company at a $29b post-money valuation, giving Meta only ~49% of the company. If you're quick with the math, you might realize that Scale's previous valuation before Meta was approximately $14b. Meta roughly doubled the valuation by handing over enough money to buy the company out, without officially buying it out.
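For anyone who wants to check the math, here is a minimal sketch of the arithmetic. It assumes the simplest reading of the deal, a $14b investment at a $29b post-money valuation, and the function names are just illustrative. With these rounded inputs the stake comes out a little under the ~49% quoted above, presumably because the headline figures are themselves rounded.

```python
# Back-of-the-envelope check of the deal numbers quoted in the text.
# Assumption: a plain $14b investment at a $29b post-money valuation.
# All figures are in billions of dollars and are rounded headline numbers.

def implied_stake(investment_b: float, post_money_b: float) -> float:
    """Fraction of the company the investor holds after the round."""
    return investment_b / post_money_b


def implied_pre_money(investment_b: float, post_money_b: float) -> float:
    """Valuation implied for the company before the new money came in."""
    return post_money_b - investment_b


investment_b = 14.0
post_money_b = 29.0

stake = implied_stake(investment_b, post_money_b)
pre_money = implied_pre_money(investment_b, post_money_b)

print(f"Implied stake: {stake:.1%}")              # Implied stake: 48.3%
print(f"Implied pre-money: ${pre_money:.0f}b")    # Implied pre-money: $15b
```

The implied pre-money figure lands close to Scale's previous ~$14b valuation, which is the point: the check Meta wrote is roughly the size of the whole company before the deal.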

Scale is technically independent, as they claim on their blog. Scale employees still have Scale AI stock instead of Meta stock, though it is probably significantly diluted. Scale's board remains legally in control of the company.

But, also, come on. This is essentially an acquisition! Legally, an acquisition requires a certain set of arcane things to happen. Documents to be signed, filings to be filed, things like that. But colloquially, an acquisition happens when a company can no longer decide its own fate, and has to take marching orders from someone else. That's more or less what's happening here. If Meta wants something from Scale, what are the Scale folks going to do? Say no? The current CEO, Alexandr Wang, is leaving to take a job at Meta as part of the deal even while staying on the board! Between Meta's shares and Alexandr's shares (rumored to be around 15%), they have a solid voting majority.

This kind of deal is pretty rare. Most of the time, if you want to acquire a company, you just…acquire the company. Why go through all these extra hoops?

The answer is that Meta does not want the company. It wants Alexandr.

Meta doesn't need Scale's core business

The Meta / Scale deal is extremely similar to the Google / Character.AI deal from last August, and the Microsoft / Inflection deal from last March. In both of the latter two cases, the "investing" megacorp paid out a hefty sum to poach all of the talent, leaving the startup a shell of its former self. Technically still alive; in practice, a zombie. The poached talent ends up doing fairly well, landing sweet gigs as the leads of key innovation arms at the megacorp. The people who are left behind? Not so much. One of the big criticisms of the Google deal was that the founders made out like bandits (Noam, for example, is now one of the leads on Gemini and is likely clearing 8 figures in salary[1]) while the remainder of the 150-person staff at Character.AI got screwed. Even though Character.AI technically raised money, it was clear that the actual value of the extremely illiquid Character.AI stock had gone way down because all the people who made that stock valuable left as part of the deal.[2]

Scale AI does not make sense as part of Meta's portfolio. Scale is a B2B body shop. It farms out mass labor in the developing world to produce labeled datasets for ML training. It is a pickaxe and shovel business, Mechanical Turk as a Service. There is no special tech there, no critical IP, just sweat and logistics and data.

Now, one could argue that even if the rest of the company isn't useful to Meta, that data is valuable. I've written about how data- and compute-hungry LLM companies are; several of the big AI players use Scale datasets in some form, including Meta and OpenAI. I think it's a good argument in theory, but it dramatically overstates the importance of Scale AI. As far as I can tell, none of these companies rely on that data in any meaningful way. The LLM providers all work with several data providers, in addition to having their own labeling and simulation teams. Google, for example, has its own version of Scale AI called HComp (Human Computation) that is built entirely in house. Scale finds more play among the B-players in the AI world. Toyota. Etsy. General Motors. Various world governments. People who are late to the AI party or who are not tech-native; organizations that know they need AI, but don't really know how or what that means.

All of this to say, it seems extremely unlikely that Meta cares about Scale's core business. Meta, the consumer social media company, is not about to do a hard pivot to start selling data labeling services to other enterprises. Nor is it getting a lot of value out of hoarding Scale's datasets or cutting off its competitors' access to them, since those competitors aren't dependent on Scale to begin with. And it definitely isn't treating this as a standard VC investment where it expects Scale to produce massive returns, since it is buying a huge chunk of the company at a very strange valuation multiple pretty late in Scale's existence.

All signs point to Alexandr as the key. Meta spent $14b to hire a single guy.

Meta is falling behind in the AI race

This, of course, just raises the question. Why on earth do they want this one guy so badly? I'm not sure there is a reasonable answer here. My own intuition says that this investment is kind of insane. But here is my best sketch of Meta's position.

I've said several times now that the LLM model provider layer is a winner-take-all market.

Going back to LLMs, I think you see roughly the same market dynamics. LLMs are pretty easy to make, lots of people know how to do it, and you learn how in any CS program worth a damn. But there are massive economies of scale (GPUs, data access) that make it hard for newcomers to compete, and using an LLM is effectively free, so consumers have no stickiness and will always go for the best option. You may eventually see one or two niche LLM providers, like our LexisNexis above. But for the average person these don't matter at all; the big money is in becoming the LLM layer of the Internet.

And, as expected, there are only a handful of LLMs that matter. There's a Chinese one and a European one, both backed by state interests. And there's Google, Anthropic, and OpenAI, all jockeying for the critical "foundation layer" spot.

The economics of LLMs means that it is critical for these players to have the best models. There's no room for second place.

Bluntly, Meta is not currently winning the consumer or developer markets. In fact, Meta isn't anywhere close. OpenAI has more or less dominated the consumer space, while Google and Anthropic have jockeyed for position among developers. Meanwhile, even though Meta has done some really good research (including the SAM model family), they haven't really been competitive at the top of the LLM AI world since the release of Llama 2.0 back in 2023.

That's not necessarily an unexpected or bad thing. Meta didn't have the AI expertise or compute necessary to really compete on producing LLMs 4 years ago. Instead of going toe to toe with Google's TPU stack and OpenAI's deep research bench, Meta strategically carved out its own niche by focusing on open source even as all of its competitors aggressively closed shop. And for a while, that was really paying off for them! Meta had a small but rabid contingent of open source developers, hobbyists, and researchers who were pushing the boundaries of what LLMs could do in all sorts of neat and exciting ways. The Llama family of models really did look like the Linux to OpenAI/Google's Windows and Anthropic's MacOS.

But the story for Meta changed pretty dramatically in late 2024 with the release of Deepseek. I covered some of the reaction here:

Tech Things: Inference Time Compute, Deepseek R1, and the arrival of the Chinese Model

A core feature of LLM tooling is the ability to quickly switch between different kinds of models. This drives the LLM provider market into intense competition and diversification and contributes to the winner-take-all dynamics of the space. Llama, for all of its early successes, was never able to build a real moat. It's open source! The whole point is that there's no moat, at least not in the traditional sense. So within a week of Deepseek's release, everyone had switched to using Deepseek. Because, you know, it was better. Deepseek's release was rough for OpenAI, which had to reduce the cost of their o-family of models significantly while also speeding up their deployment timelines. But Deepseek was and is existential for Meta. Forget about being the best LLM provider, Meta was no longer even the best open source LLM provider. Why would anyone use Llama at all? What's the value prop?

That's why there was a lot riding on the Llama 4 release. In general, there is a soft expectation that each new generation of LLMs beats all of the previously released models from all competitors. After all, companies get to choose when to release their models. No one wants to release a dud, so all the models that do poorly are consigned to the trash heap and you only ever hear about the models that do well on at least some benchmarks. Deepseek was released after Llama 3, and, as expected, performed better than Llama 3 on key benchmarks. So folks naturally assumed that Llama 4, once released, would be better than Deepseek.

Right, well…

Supposedly, Llama 4 did perform well on benchmarks, but the user experience was so bad that people have accused Meta of cooking the books. Even though the company officially stands by Llama 4, rumor has it that Zuck is furious.

Naively, it's not immediately obvious why Meta has not been able to keep up with the other top AI labs. At the start of the LLM race, Meta was certainly more established and better capitalized than either Anthropic or OpenAI. But if you know anything about Meta's internal politics, and specifically the culture at FAIR (Fundamental AI Research, a lab started in 2013 as Facebook AI Research), the picture becomes clearer.

Meta is a company where the folks under Mark are more or less constantly jockeying for power and position. The management class has many folks who really should've stayed engineers but have been "promoted to their highest level of incompetence." Reorgs, backstabbing, and petty social games abound. I don't think anyone is necessarily cynically motivated; yes, there are some ladder climbers, but that's true everywhere. I think most people are earnestly trying to make Meta a better company. But no one in Meta middle management knows how to be results-oriented because they've been insulated from the winds of the market for their entire existence. Their only feedback is from their reporting chain. As a result, everyone is just kinda sticking to bad ideas that work for promo cycles but have no play in the real world. (Google had a similar problem. See the "slime mold" essay for everything wrong there.)

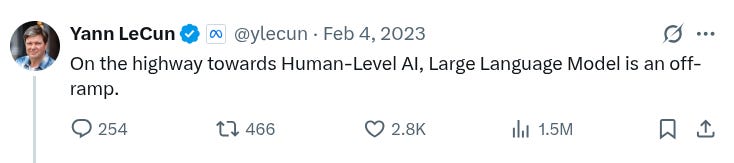

Within FAIR, much of the blame for this culture can specifically be put at the feet of Yann LeCun. I have to credit the guy, he's a brilliant researcher. But as the Chief Scientist at Meta and one of the original FAIR founders, he's remarkably set in his ways. Several years ago, Yann was adamant that Meta should build on Torch in Lua instead of porting to Python, more or less because he liked Lua a lot. He ignored autoregressive modeling, arguing throughout 2020 that transformers were not going to amount to anything and discouraging work in that direction. My more critical friends who have worked with Yann think this is because he's salty that convolutional networks became less important by comparison. In 2022, the original Llama team supposedly had to go behind Yann's back, building their initial prototype in secret and then showing it directly to Mark. This is supposedly why Meta has a GenAI team separate from FAIR. And now, while FAIR is hemorrhaging the very same researchers that produced the Llama models, Yann continues to argue against the usefulness of LLMs.

This kind of leadership is, unsurprisingly, not going to result in state-of-the-art LLMs. Add in significant institutional inertia around the Metaverse and Reality Labs, and the general lack of focus that comes with being a large company, and you get to where Meta is today: behind.

Zuckerberg is one of the few remaining founder-CEOs in big tech,[3] a man with an appetite for big swings backed by an unassailable voting majority on his own board. And, given all of the above, it seems like he is on the warpath. There have been several reports of Mark personally reaching out to researchers at other companies with 8 or 9 figure salary offers and the promise to work directly under the CEO in a new "super intelligence" lab. A few of my friends at Google have reported getting cold emails with insane offers. Matt Levine recently reported that the list of luminaries includes Nat Friedman and Daniel Gross, both of whom have their fingers in many different AI startups; and TechCrunch wrote that Meta tried to acquire Ilya Sutskever's Safe Superintelligence for $32b, almost definitely to just pick up Ilya himself in a deal similar to Scale's.

Against this wider backdrop, Alexandr — a friend of Zuckerberg's and, apparently, a shadow advisor to Mark about all things AI — was simply the first member of this new super team.

So if you think about it, is the 11 figures offered to Alexandr really that much more than the 9 figures offered to other leading AI engineers and researchers?

Yes. It is.

Is this the right direction for Meta?

Personally I think this is all insane.

Mark is correctly identifying the red tape and bureaucracy that is causing the AI efforts at Meta to fail. Creating a privileged super-intelligence team that has priority access to resources and a mandate to, well, "move fast and break things" is great. Investing billions in singular people is not.

It is extremely unclear if past success in AI research is at all correlated with future success, at least at the individual level. Many of the researchers behind critical advancements in the field are more or less one-hit wonders. Goodfellow with GANs. Bahdanau with attention. Kipf with GNNs. Sitzmann with light fields. Schmidhuber with LSTMs. The paper behind LLMs, "Attention is All You Need", was written almost entirely by folks for whom it is their singular claim to fame. Ashish Vaswani, its first author, has more than an order of magnitude (~50x) fewer citations on his next most cited paper. Even among the big three (Hinton, LeCun, and Bengio), the former two have mostly been dormant.

In my opinion, only two people in AI have shown consistent foresight. Ilya Sutskever, ex-Chief Scientist at OpenAI, has demonstrated a remarkable ability to stay on top of the ball. He was a vocal proponent of auto-regressive learning and large neural network scaling well before either of these were popular, and his work with transformers has been, well, transformative (I'm sorry, I had to). And Yoshua Bengio, professor at U Montreal and head of the Montreal Institute for Learning Algorithms (MILA), has seemingly created a PhD program where generational talents continue to appear. I am consistently shocked at how many of those one-hit-wonders mentioned above routed through Montreal. It may be selection bias, but if so, Bengio should get into picking stocks.

There's a good reason you see so many one-off successes. AI as a field does not have a unifying theory. It is, to a first approximation, all intuition and empiricism. People build mental models about what architectures and approaches may work and how various optimization problems are structured, and then test their hypotheses, and iterate towards a better-but-informal understanding. You get the best intuition by running the most experiments. As a result, the labs that consistently put out the best work in AI are not staffed with a small number of incredible once-in-a-generation talents. Rather, they are filled with a lot of people who are deeply interested in similar problem spaces, and who are all constantly running their own experiments and sharing what they learn. Sometimes, one of those people hits the jackpot and AI as a field is changed forever. But it would be wrong to assign that individual person undue credit. It's the environment. And the backbone of such an environment is freedom to try anything and everything, a strong collaborative culture, and open access to near limitless resources. This was exactly how Google was set up for years, and it's exactly why Google was leading AI research for more than a decade (and arguably still is). As a result, I'm not convinced that even Ilya is worth 11 figures in direct comp.

I think Meta can fix their problems. But I'm not convinced that the route to doing so involves poaching "superstars." Meta has a branding problem in the AI world. People don't want to work there even if they are being offered really good salaries. Paying even larger boatloads of cash to bring in specific people doesn't exactly fix that branding problem, it just looks desperate. And when the most prominent people being brought in to run things are folks like Alexandr — people who aren't researchers, aren't known or respected by researchers, and may have never actually trained a model — the whole project begins to feel rather misguided.

Still.

I have a lot of respect for Mark, both as a founder and as a person. Mark has had an immense amount of power and has wielded it respectably, especially when we see what the alternative could have been with Musk. Everyone I know who knows Zuck personally has very positive things to say, a rarity for his wealth class. And, in general, people who have bet against the man have lost significant amounts of money.

Of all of the big AI players, Mark remains the most dynamic and compelling CEO to me, which is why it's been a bit of a shame that Meta hasn't been able to keep pace. But if anyone can turn the ship around, I think it's Zuck, and so regardless I'm eager to see what comes of this newfound focus.

[1] Not that he needed it. Noam had been at Google for years, pre-IPO. He was already a multi dozen millionaire prior to spinning off.

[2] I don't think this kind of 'investment' will ever become super common, since it rarely makes sense for an acquirer to acquire in this way. But I will say that I am not happy this has happened three different times, and I'd hate for this to be precedent-setting. Joining a startup is already a risky proposition. As a founder, you are making a promise to your employees to do right by them. Abandoning them for riches while they stay on a sinking ship feels gross to me.

[3] Gates, Larry, Sergey, Bezos, and Jobs are all gone, after all.

I'm curious as to why you hold Zuck in such regard. I agree he is far more palatable than Elon, but my sense is that he is fundamentally self serving and self aggrandizing. I'm not as plugged into the bay as you are, but I feel like in my mind there is a firm distinction between the CEOs who eventually give a damn about their fellow man (e.g. Gates/Collison), those who just want to run a good business (Bezos/Cook), those who aspire to a vision (Jobs/Dario), and those whose first allegiance is their ego (Musk/Zuck).

I'm curious if you disagree with the dichotomy itself, where I put Zuck, or both.